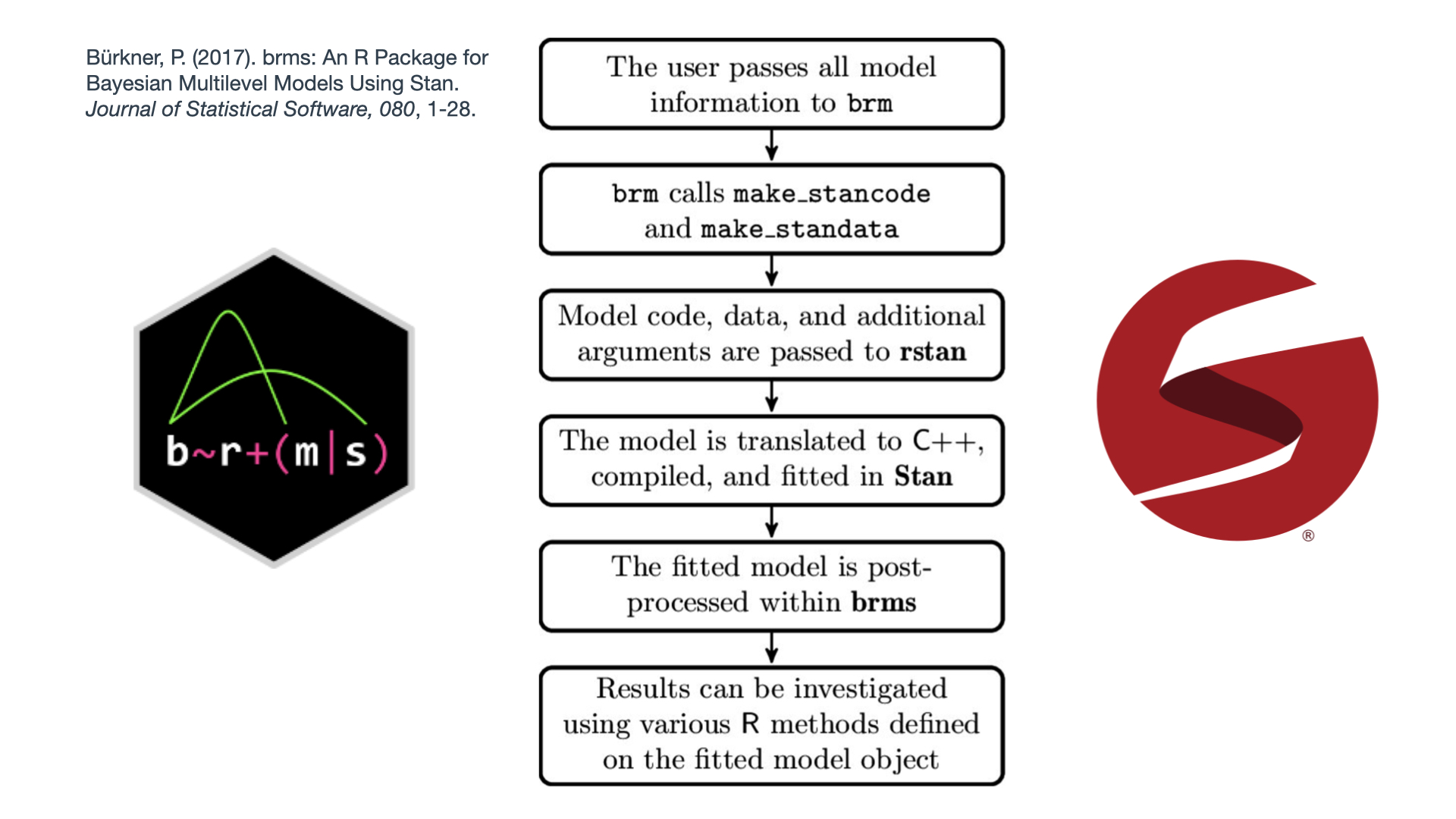

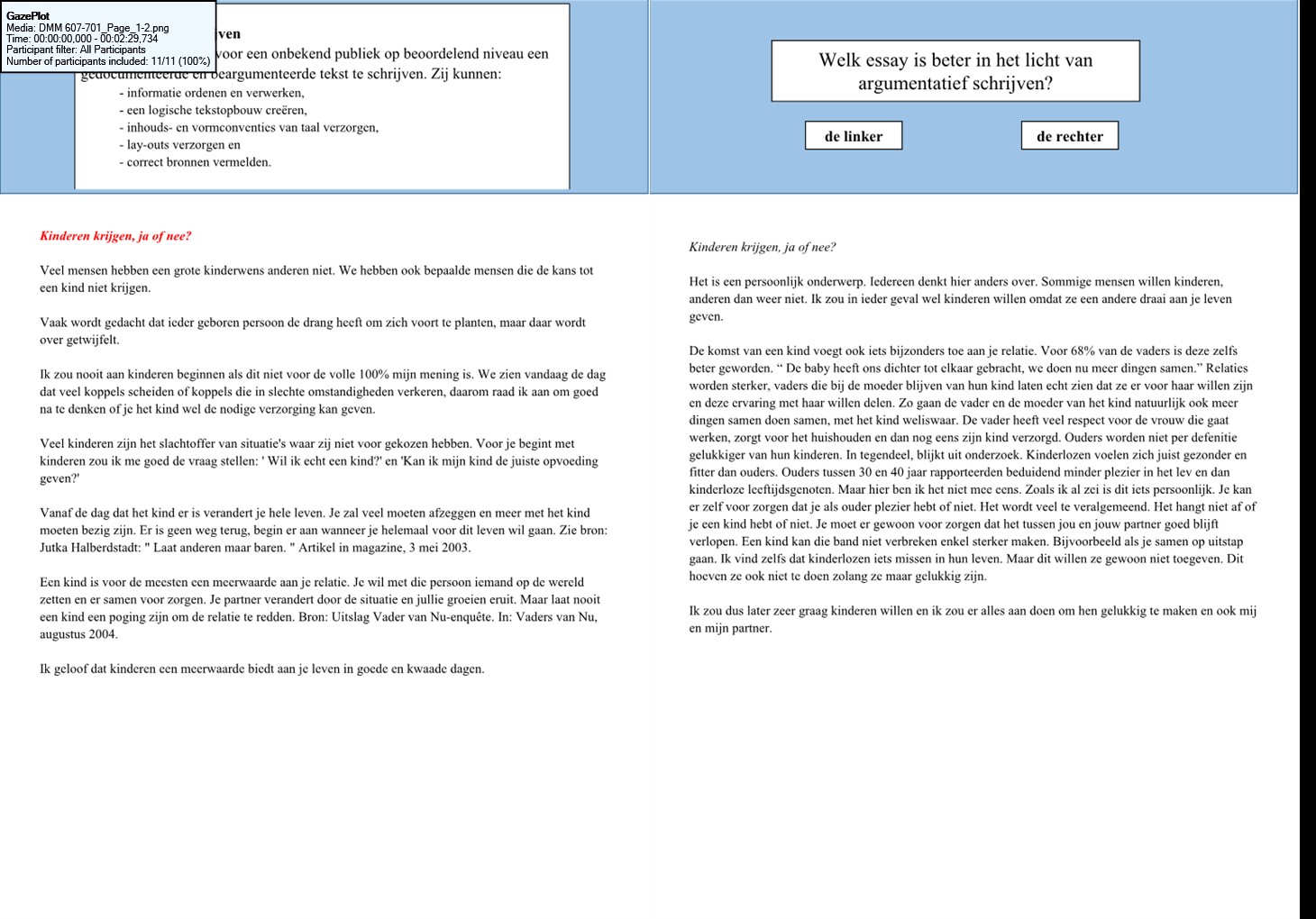

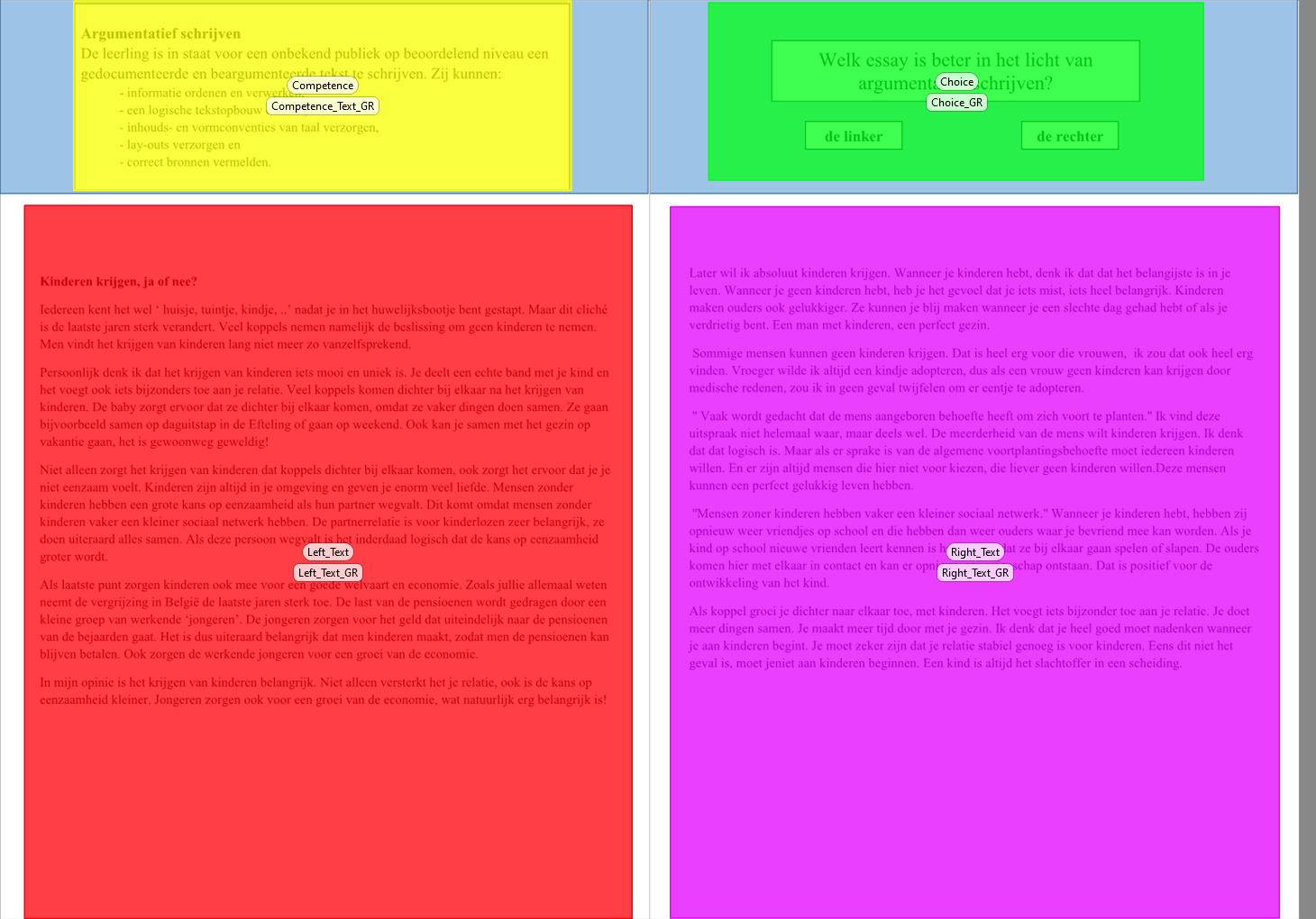

class: center, middle

<style>
.center2 {
  margin: 0;
  position: absolute;
  top: 50%;
  left: 50%;
  -ms-transform: translate(-50%, -50%);
  transform: translate(-50%, -50%);
}
</style>

<style type="text/css">
.right-column{
  padding-top: 0;
}
.remark-code, .remark-inline-code {
  font-family: 'Source Code Pro', 'Lucida Console', Monaco, monospace;
  font-size: 90%;
}
</style>

<div class="my-logo-left">
<img src="img/UA-eng-hor-1-RGB.jpg" width="90%"/>
</div>

# Workshop: Bayesian analyses in R

.font160[
.SW-greenD[Sven De Maeyer]
]

[University of Antwerp / Faculty of Social Sciences]

.font80[
.UA-red[
13/09/2021
]
]

---
background-image: url(despicable_me2.jpeg)
background-size: contain
class: inverse

## .yellow[About me]

- University of Antwerp; Faculty of Social Sciences; dept. Training and Education Sciences

- PhD on methodological issues in research on School Effectiveness

- research on a broad range of topics (e.g. psychometrics, writing research, learning strategies, education for sustainable development, linguistics, speech development of impaired children, ...)

- my main focus shifted recently to Comparative Judgement and Learning from comparisons

- for the past year or two, discovering Bayesian statistics & `brms`

<br>
<br>
<br>

Blog: https://svendemaeyer.netlify.app/

Twitter: @svawa

GitHub: https://github.com/Sdemaeyer2

---
background-image: url(we_like_you_too.jpg)
background-size: contain
class: inverse, center, bottom

## .green[What about you?]

.black[Who has some experience with Bayesian analyses?]

.black[... with `Stan`?]

.black[ ... with `brms`?]

<br>
<br>
<br>

---
name: topicslide

# Topics

1. Aims of the workshop & some practical stuff ([let's go there](#aims))

2. What's Bayesian? ([let's go there](#Whats_Bayesian))

3. Why .UA-red[`brms`]? ([let's go there](#why_brms))

4. .UA-red[`brms`] basic example ([let's go there](#brms_example))

5. Let's get practical: .SW-greenD[the example data] ([let's go there](#example_data))

6. Let's slightly increase complexity ([let's go there](#add_complexity))

---
class: inverse-green, center, middle
name: aims

# 1. Aims & practical stuff

.footnote[[<i> get me back to the topic slide</i>](#topicslide)]

---
## My aims are ...

<br>
<br>

- to give some background on Bayesian statistics without getting too technical (I hope)

- to get you familiar with a basic workflow for a Bayesian analysis making use of .UA-red[ `brms`] and other great packages

- to let you apply what you have learned to your own data (so it's hands-on)

- to supply you with .UA-red[ `R code`] that may inspire you

- to share some great sources

---
## All material is shared...

<br>

On GitHub there is a dedicated repository where you'll find:

- the Rmarkdown file for the slides

- saved models from the slides (some take too long to estimate 'live')

- data used for the exercises

<br>

The GitHub repository can be accessed using the following link: [https://github.com/Sdemaeyer2/Turku_brms_2021]

<br>

The slides are on my Talks site [https://slides-sdemaeyer.netlify.app/]

---
## Zoom

<br>

Recordings

<br>

Please ask questions and interrupt! Just give a shout!

<br>

Stuck with some exercises? `\(\rightarrow\)` Share your screen and I will try to help

---
## Your own data

Day 2:

.center2[
- some dedicated time to apply stuff to your own data

- be prepared:
    - think about some (simple) models (and possible priors...)
    - prepare your data to avoid a lot of data issues on day 2
    - for the brave: try some modelling at home before day 2
]

---
name: Prerequisites

## Prerequisites

- be familiar with R

- have `CmdStan` & `cmdstanr` up and running (see https://mc-stan.org/cmdstanr/articles/cmdstanr.html)

- have `brms` installed

- have `tidyverse` installed

I will often use the .SW-greenD[functional programming] style making use of the pipe operator (works if `tidyverse` is loaded):

```r
A %>% B
```

This reads as .UA-red[take A] and then .UA-red[do B]

An example:

```r
c(1,2,3,4,5) %>% mean()
```

reads as .UA-red[create a vector of the values 1, 2, 3, 4 and 5] and then .UA-red[calculate the mean]

Want to learn dplyr through nice GIFs? [Hop to this great tweet feed](#tweet_dplyr)

---
class: inverse-green, center, middle
name: Whats_Bayesian

## 2. What's Bayesian inference about?

.footnote[[<i> get me back to the topic slide</i>](#topicslide)]

---
## First things first...

<br>
<br>

What is <b>.SW-greenD[statistical inference]</b> about?

<br>
<br>

In your definition, what is a <b>.SW-greenD[statistical model]</b>?

<br>
<br>

What are <b>.SW-greenD[parameters]</b>?

---
## Frequentist vs. Bayesian inference

.pull-left[
<img src="figures_inferences_NHST.jpeg" width="110%" height="110%" />
]

.pull-right[
<img src="figures_inferences_Bayes.jpeg" width="110%" height="110%" />
]

---
## To get us started...

.left-column[
<img src="Van_Helsing_1931.png" width="110%" height="110%" />
]

.right-column[
- Prof. Dr. Van Helsing (a famous Vampire Hunter)

- How many Vampires are out there?

- Time for his famous .UA-red[Vampire Test]

- Sample of 200 citizens

- Result: 10.5% = Vampire

- Ok, but what about the population?
]

---
## What a Frequentist approach can tell us

Make a Confidence Interval for the 10.5%:

- Estimate the standard error:
    * standard error: `\(\sqrt{0.105 \times 0.895 / 200} \approx 0.0217\)`

- Calculate upper and lower limit of the 95% CI (`\(0.105 \pm 1.96 \times 0.0217\)`):
    * lower: 0.0625
    * upper: 0.1475

- Inference:

> If we drew many samples (of sample size 200) and built a 95% CI from each, about 95% of those intervals would contain the true population percentage of vampires; for our sample the interval runs from 6.25% to 14.75%

---
## The Bayesian approach

.left-column[
Before Van Helsing gathers data, he has no knowledge at all!

If we visualize this 'prior knowledge' it could look like this.
]

.right-column[
<img src="Part1_files/figure-html/unnamed-chunk-9-1.png" width="576" />
]

---
## The Bayesian approach

.left-column[
Van Helsing starts testing ...

First tested person is NOT a Vampire!

So, 100% Vampires is no longer possible, and high percentages become less probable.

Time to adjust our knowledge
]

.right-column[
<img src="Part1_files/figure-html/unnamed-chunk-10-1.png" width="576" />
]

---
## The Bayesian approach

.left-column[
After testing the whole sample of 200 citizens, Van Helsing's knowledge is updated:
]

.right-column[
<img src="Part1_files/figure-html/unnamed-chunk-11-1.png" width="576" />
]

---
## The Bayesian approach

.left-column[
We get a probability for each possible percentage of vampires.

* plot it in a .UA-red[probability density] plot

* summarize: e.g. the 90% most probable values lie between 7.5% and 14.8%
]

.right-column[
<img src="Part1_files/figure-html/unnamed-chunk-12-1.png" width="576" />
]

---
## Advantages & Disadvantages of Bayesian analyses

<br>

Advantages:

- Natural approach to express uncertainty
- Ability to incorporate prior knowledge
- Increased model flexibility
- Full posterior distribution of the parameters
- Natural propagation of uncertainty

<br>

Disadvantages:

- Slow speed of model estimation
- Some reviewers don't understand you (<i>"give me the p-value"</i>)

.footnote[[*] Slight adaptation of a slide from Paul Bürkner's presentation available on YouTube [https://www.youtube.com/watch?v=FRs1iribZME] ]

---
## Bayes' Theorem

.pull-left[
<img src="prior_data_posterior.png" width="50%" height="50%" />
]

.pull-right[
$$
P(\theta|data) = \frac{P(data|\theta)P(\theta)}{P(data)}
$$

<br>

with

- `\(P(data|\theta)\)` : the .UA-red[likelihood] of the data given our model `\(\theta\)`

- `\(P(\theta)\)` : our .UA-red[prior] belief about model parameters

- `\(P(\theta|data)\)`: the .UA-red[posterior] probability about model parameters
]

.footnote[meme from https://twitter.com/ChelseaParlett/status/1421291716229746689?s=20]

---
## Likelihood

.left-column[
`\(P(data|\theta)\)`

sometimes also written as

`\(L(\theta|data)\)`
]

.right-column[
<br>

Actually this is our .UA-red[<b>model</b>] part

<br>

with `\(\theta\)` being the model and all parameters in the model
]

---
## Likelihood

.left-column[
Example:

.SW-greenD[<i>What if we want to model how fast people can run a 10K?</i>]

<img src="running.jpg" width="80%" height="80%" />
]

.right-column[
Data = 10 observations (Running times for 10K in minutes)

```
##  [1] 52 54 58 48 41 49 72 53 64 62
```

The model (<i>normal distribution</i>):

`\(y_i \sim N(\mu, \sigma)\)`

The parameter values that maximize the likelihood of our data (for `\(\sigma\)` approximately: `sd()` divides by `\(n - 1\)` rather than `\(n\)`):

.footnotessize[
```r
Mean_RT <- mean(RT, na.rm = TRUE)
Mean_RT
```

```
## [1] 55.3
```

```r
Sd_RT <- sd(RT, na.rm = TRUE)
Sd_RT
```

```
## [1] 8.957306
```
]
]

---
## Prior

.center2[
.SW-greenD[ Expression of our prior knowledge (belief) about probable parameter values as a probability density function ]

<br>
<br>

><i>"For Bayesians, the data are treated as fixed and the parameters vary. [...] Bayes' rule tells us that to calculate the posterior probability distribution we must combine a likelihood with a prior probability distribution over parameter values." </i>
<br>
(Lambert, 2018, p.88)
]

---
## Prior

.left-column[
Example:

.SW-greenD[<i>What if we want to model how fast people can run a 10K?</i>]

<img src="running.jpg" width="80%" height="80%" />
]

.right-column[
`\(y_i \sim N(\mu, \sigma)\)`

Express our prior beliefs about .UA-red[ `\(\mu\)` ] and .UA-red[ `\(\sigma\)` ]

.UA-blue[
> .Large[🤔] <i> Please share your thoughts! What are plausible values of the population average and standard deviation for our example? </i>
]
]

---
## Prior

.pull-left[
### Uninformative / Vague

When .SW-greenD[objectivity] is crucial and you want to <i> .SW-greenD[let the data speak for itself...]
</i>
]

.pull-right[
### Informative

When including significant information is crucial

- previously collected data
- results from former research/analyses
- data of another source
- theoretical considerations
]

---
## Prior

.left-column[
Example:

.SW-greenD[<i>What if we want to model how fast people can run a 10K?</i>]

<img src="running.jpg" width="80%" height="80%" />
]

.right-column[
<b>Vague priors for `\(\mu\)` </b>

<img src="Part1_files/figure-html/unnamed-chunk-19-1.png" width="504" />
]

---
## Say we have the following priors

```r
par(mfrow=c(2,2))
curve( dnorm( x , 50 , 20 ) , from=1 , to=200 ,xlab="mu", main="Prior for mu")
curve( dunif( x , 1 , 40 ) , from=-10 , to=50 ,xlab="sigma", main="Prior for sigma")
```

<img src="Part1_files/figure-html/unnamed-chunk-20-1.png" width="504" />

---
## Calculate the Posterior by hand (aka .UA-red[grid approximation])

.pull-left[
```r
# define a grid of candidate values
# for mu and sigma
mu.list <- seq(from = 30,
               to = 90,
               length.out = 200)
sigma.list <- seq(from = 2,
                  to = 30,
                  length.out = 200)
post <- expand.grid(mu = mu.list,
                    sigma = sigma.list)

# Calculate the loglikelihood of the data
# for each parameter value
post$LL <- sapply(1:nrow(post),
  function(i) sum(
    dnorm( RT ,
           mean = post$mu[i] ,
           sd = post$sigma[i] ,
           log = TRUE )
  )
)
```
]

.pull-right[
```r
# Calculate posterior as product
# of LL and Prior
# but you see a '+ sign'
# because we put everything on the
# log scale
# (to avoid getting zeros
# due to rounding in R)
# note: the prior sd for mu is 10 here
# (the plot on the previous slide used 20)
post$prod <- post$LL +
  dnorm(post$mu, 50 , 10 , log = TRUE) +
  dunif(post$sigma , 1 , 40 , log = TRUE)

# Re-scale the posterior
# to the probability scale
post$prob <- exp(post$prod - max(post$prod) )
```
]

---
## Visualise the posterior distribution

<i> Make a contour plot </i>

.pull-left[
```r
# Sampling going on
# So for reproducibility
set.seed(1975)

post %>%
  # sample 10000 rows
  # with replacement;
  # rows with a higher prob are
  # more likely to be sampled
  sample_n(size = 10000,
           replace = TRUE,
           weight = prob) %>%
  # create the plot
  ggplot(aes(x = mu, y = sigma)) +
  geom_density_2d_filled() +
  theme_minimal()
```
]

.pull-right[
<img src="Part1_files/figure-html/unnamed-chunk-24-1.png" width="432" />
]

---
## Visualise the posterior distributions

<i> Or sample from posterior and plot simple density plots </i>

```r
sample.rows <- sample(1:nrow(post), size=10000, replace=TRUE, prob=post$prob)
sample.mu <- post$mu[sample.rows]
sample.sigma <- post$sigma[sample.rows]
```

.pull-left[
```r
plot(density(sample.mu),main="mu")
```

<img src="Part1_files/figure-html/unnamed-chunk-26-1.png" width="288" />
]

.pull-right[
```r
plot(density(sample.sigma),main="sigma")
```

<img src="Part1_files/figure-html/unnamed-chunk-27-1.png" width="288" />
]

---
class: inverse-green, center, middle
name: why_brms

## 3. Why `brms`?

.footnote[[<i> get me back to the topic slide</i>](#topicslide)]

---
## Imagine

A 'simple' linear model

<br>

`$$\begin{aligned} & RT_{i} \sim N(\mu_{i},\sigma_{e})\\ & \mu_{i} = \beta_0 + \beta_1*\text{Weight}_{i} + \beta_2*\text{Height}_{i} + \beta_3*\text{Age}_{i} + \beta_4*\text{WeeklyTrainingHours}_{i} + \beta_5*\text{Gender}_{i} \\ \end{aligned}$$`

<br>

So you can get a REALLY LARGE number of parameters!

---
## Markov Chain Monte Carlo - Why?

Complex models `\(\rightarrow\)` Large number of parameters `\(\rightarrow\)` an exponentially growing number of combinations!

<br>

The posterior can no longer be computed by grid approximation

<br>

We will approximate the 'joint posterior' .SW-greenD[by 'smart' sampling]

<br>

Samples of combinations of parameter values are drawn

<br>

BUT: .SW-greenD[samples will not be fully random (each draw depends on the previous one)!]

---
## MCMC - demonstrated

.center2[
The following link brings you to an interactive tool that lets you grasp the basic idea behind MCMC sampling:

https://chi-feng.github.io/mcmc-demo/app.html#HamiltonianMC,standard
]

---
## Side-step: conjugate priors

Some prior probability distributions can be <i>'more easily'</i> combined with a certain likelihood!
These are called .SW-greenD[<b>conjugate</b>] priors

<b>PRO: </b>

You can actually calculate the posterior analytically. So: no need for all the sampling and approximation of the posterior.

A list of conjugate likelihood-prior pairs: https://en.wikipedia.org/wiki/Conjugate_prior

<b>BUT: </b>

><i>"Simplicity comes with a cost! In most real-life examples of inference, the constraint of choosing likelihood-prior conjugate pairs is too restrictive and can lead us to use models that inadequately capture the variability in the data." </i>
<br>
(Lambert, 2018, p. 209)

---
## Software

<br>

- different dedicated software/packages are available: JAGS / BUGS / Stan

<br>

- most powerful is .UA-red[Stan]! Specifically the *Hamiltonian Monte Carlo* algorithm makes it the best choice at the moment

<br>

- .UA-red[Stan] is a probabilistic programming language that uses C++

---
## Example of Stan code

.scriptsize[
```
## // generated with brms 2.14.4
## functions {
## }
## data {
##   int<lower=1> N;  // total number of observations
##   vector[N] Y;  // response variable
##   int prior_only;  // should the likelihood be ignored?
## }
## transformed data {
## }
## parameters {
##   real Intercept;  // temporary intercept for centered predictors
##   real<lower=0> sigma;  // residual SD
## }
## transformed parameters {
## }
## model {
##   // likelihood including all constants
##   if (!prior_only) {
##     // initialize linear predictor term
##     vector[N] mu = Intercept + rep_vector(0.0, N);
##     target += normal_lpdf(Y | mu, sigma);
##   }
##   // priors including all constants
##   target += normal_lpdf(Intercept | 500,50);
##   target += student_t_lpdf(sigma | 3, 0, 68.8)
##     - 1 * student_t_lccdf(0 | 3, 0, 68.8);
## }
## generated quantities {
##   // actual population-level intercept
##   real b_Intercept = Intercept;
## }
```
]

---

---
class: inverse-green, center, middle
name: brms_example

## 4. `brms` basic example

.footnote[[<i> get me back to the topic slide</i>](#topicslide)]

---
## `brms` syntax

Very similar to `lme4`, and in line with the typical R style of writing up a model ...

.pull-left[
`lme4`

```r
Model <- lmer(
  y ~ x1 + x2 + (1|Group),
  data = Data,
  ...
)
```
]

.pull-right[
`brms`

```r
Model <- brm(
  y ~ x1 + x2 + (1|Group),
  data = Data,
* family = "gaussian",
* backend = "cmdstanr",
  ...
)
```
]

Notice:

- `family = "gaussian"` indicates the "likelihood" function we will use
- `backend = "cmdstanr"` indicates the way we want to interact with Stan and C++

---
## Let's retake the example on running

.left-column[
Example:

.SW-greenD[<i>What if we want to model how fast people can run a 10K?</i>]

<img src="running.jpg" width="80%" height="80%" />
]

.right-column[
The simplest model looked like:

$$
RT_i \sim N(\mu,\sigma_e)
$$

In `brms` this model is:

```r
RT <- c(
  52, 54, 58, 48, 41,
  49, 72, 53, 64, 62
)

# First make a dataset from our RT vector
# (tibble() replaces the deprecated data_frame())
DataRT <- tibble(RT)

Mod_RT1 <- brm(
  RT ~ 1, # We only model an intercept
  data = DataRT,
  backend = "cmdstanr",
  seed = 1975
)
```

.UA-red[<b> .Large[🏃] Try it yourself and run the model ... (don't forget to load the necessary packages: `brms` & `tidyverse`) </b> ]
]

---

<img src="RT_Model1_Estimation.jpg" width="60%" height="60%" />

---
## But ...
.center2[
<img src="MCMC_Skeleton.jpg" width="50%" height="50%" />
]

---
## So

For the workshop, all models are saved in the .SW-greenD[models] folder

You can load a saved model with `readRDS`

Here's an example:

```r
Mod_RT1 <- readRDS(here("models", "Mod_RT1.RDS"))
```

This model was saved with the `saveRDS` function:

```r
saveRDS(Mod_RT1, here("models", "Mod_RT1.RDS"))
```

.footnote[Notice that I use the package `here`, which lets me access files anywhere in the RStudio project folder with the `here( )` command (no fuss with paths on your computer)]

---
# MCMC = sampling

.left-column[
MCMC results will differ each time you run the model

<br>

Of course, not a lot if your model is good!

<br>

So, what if you want to be reproducible?
]

.right-column[
```r
Mod_RT1 <- brm(
  RT ~ 1, # We only model an intercept
  data = DataRT,
  backend = "cmdstanr",
* seed = 1975 # make sure we get the same results each time :-)
)
```
]

---
## Good old `summary( )` function

```r
summary(Mod_RT1)
```

```
##  Family: gaussian 
##   Links: mu = identity; sigma = identity 
## Formula: RT ~ 1 
##    Data: DataRT (Number of observations: 10) 
## Samples: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;
##          total post-warmup samples = 4000
## 
## Population-Level Effects: 
##           Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
*## Intercept    55.10      2.82    49.68    60.90 1.00     2000     2180
## 
## Family Specific Parameters: 
##       Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
*## sigma     9.47      2.32     6.17    15.08 1.00     2445     2318
## 
## Samples were drawn using sample(hmc). For each parameter, Bulk_ESS
## and Tail_ESS are effective sample size measures, and Rhat is the potential
## scale reduction factor on split chains (at convergence, Rhat = 1).
```

---
## Samples & chains?
```
##  Family: gaussian 
##   Links: mu = identity; sigma = identity 
## Formula: RT ~ 1 
##    Data: DataRT (Number of observations: 10) 
*## Samples: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;
*##          total post-warmup samples = 4000
## 
## Population-Level Effects: 
##           Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## Intercept    55.10      2.82    49.68    60.90 1.00     2000     2180
## 
## Family Specific Parameters: 
##       Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## sigma     9.47      2.32     6.17    15.08 1.00     2445     2318
## 
## Samples were drawn using sample(hmc). For each parameter, Bulk_ESS
## and Tail_ESS are effective sample size measures, and Rhat is the potential
## scale reduction factor on split chains (at convergence, Rhat = 1).
```

<i>By default `brms` runs .SW-greenD[4 chains of 2000 iterations] of which .SW-greenD[1000 iterations/chain are warm-up] </i>

---
## Samples & chains: what are 'burn-in' iterations

.center2[
<img src="mcmc_fases_p1.png" width="100%" height="100%" />
]

---
## Samples & chains: what are 'burn-in' iterations

.center2[
<img src="mcmc_fases_p2.png" width="100%" height="100%" />
]

---
## Samples & chains: what are 'burn-in' iterations

.center2[
<img src="mcmc_fases_p3.png" width="100%" height="100%" />
]

---
## Good old `plot( )` function

.pull-left[
```r
plot(Mod_RT1)
```

<img src="Part1_files/figure-html/unnamed-chunk-44-1.png" width="432" />
]

.pull-right[
.UA-red[Left panel] the posterior distributions (<i>later we will see other more informative ways to plot the information in the posterior</i>)

.UA-red[Right panel] the convergence of each parameter `\(\rightarrow\)` should look like a caterpillar

<img src="catterpillar.jpeg" width="50%" height="50%" />
]

---
## Model Convergence

.pull-left[
<img src="Vethari_paper.jpg" width="99%" height="99%" />
]

.pull-right[
- `\(\widehat R\)` < 1.01 for each parameter estimate

- at least 4 chains are recommended

- Effective Sample Size (ESS) > 400 to rely on `\(\widehat R\)`
]

---
## Let's inspect the output again

```
##  Family: gaussian 
##   Links: mu = identity; sigma = identity 
## Formula: RT ~ 1 
##    Data: DataRT (Number of observations: 10) 
## Samples: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;
##          total post-warmup samples = 4000
## 
## Population-Level Effects: 
##           Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## Intercept    55.10      2.82    49.68    60.90 1.00     2000     2180
## 
## Family Specific Parameters: 
##       Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## sigma     9.47      2.32     6.17    15.08 1.00     2445     2318
## 
## Samples were drawn using sample(hmc). For each parameter, Bulk_ESS
## and Tail_ESS are effective sample size measures, and Rhat is the potential
## scale reduction factor on split chains (at convergence, Rhat = 1).
```

---
class: inverse-green, center, middle
name: example_data

## 5. Let's get practical: the example dataset

.footnote[[<i> get me back to the topic slide</i>](#topicslide)]

---
## Judging 'argumentative texts'

---
## Procedure

- 26 high school teachers (Dutch) participated voluntarily

- each did 10 comparisons of 2 argumentative texts from 10th graders

- 3 batches of comparisons with random allocation of judges to one of the batches

- all batches had a similar composition of comparisons regarding the characteristics of the pairs; the pairs themselves, however, were not the same

- Tobii TX300 dark pupil eye-tracker with a 23-inch TFT monitor (max.
resolution of 1920 x 1080 pixels) - data sampled binocularly at 300 Hz --- ## AOI's  --- ## The data <div id="htmlwidget-0af9996899d34f003f8e" style="width:100%;height:auto;" class="datatables html-widget"></div> <script type="application/json" data-for="htmlwidget-0af9996899d34f003f8e">{"x":{"filter":"none","fillContainer":false,"data":[["1","2","3","4","5","6","7","8","9","10","11","12","13","14","15","16","17","18","19","20","21","22","23","24","25","26","27","28","29","30","31","32","33","34","35","36","37","38","39","40","41","42","43","44","45","46","47","48","49","50","51","52","53","54","55","56","57","58","59","60","61","62","63","64","65","66","67","68","69","70","71","72","73","74","75","76","77","78","79","80","81","82","83","84","85","86","87","88","89","90","91","92","93","94","95","96","97","98","99","100","101","102","103","104","105","106","107","108","109","110","111","112","113","114","115","116","117","118","119","120","121","122","123","124","125","126","127","128","129","130","131","132","133","134","135","136","137","138","139","140","141","142","143","144","145","146","147","148","149","150","151","152","153","154","155","156","157","158","159","160","161","162","163","164","165","166","167","168","169","170","171","172","173","174","175","176","177","178","179","180","181","182","183","184","185","186","187","188","189","190","191","192","193","194","195","196","197","198","199","200","201","202","203","204","205","206","207","208","209","210","211","212","213","214","215","216","217","218","219","220","221","222","223","224","225","226","227","228","229","230","231","232","233","234","235","236","237","238","239","240","241","242","243","244","245","246","247","248","249","250","251","252","253","254","255","256","257","258","259","260","261","262","263","264","265","266","267","268","269","270","271","272","273","274","275","276","277","278","279","280","281","282","283","284","285","286","287","288","289","290","291","292","293","294","29
5","296","297","298","299","300","301","302","303","304","305","306","307","308","309","310","311","312","313","314","315","316","317","318","319","320","321","322","323","324","325","326","327","328","329","330","331","332","333","334","335","336","337","338","339","340","341","342","343","344","345","346","347","348","349","350","351","352","353","354","355","356","357","358","359","360","361","362","363","364","365","366","367","368","369","370","371","372","373","374","375","376","377","378","379","380","381","382","383","384","385","386","387","388","389","390","391","392","393","394","395","396","397","398","399","400","401","402","403","404","405","406","407","408","409","410","411","412","413","414","415","416","417","418","419","420","421","422","423","424","425","426","427","428","429","430","431","432","433","434","435","436","437","438","439","440","441","442","443","444","445","446","447","448","449","450","451","452","453","454","455","456","457","458","459","460","461","462","463","464","465","466","467","468","469","470","471","472","473","474","475","476","477","478","479","480","481","482","483","484","485","486","487","488","489","490","491","492","493","494","495","496","497","498","499","500","501","502","503","504","505","506","507","508","509","510","511","512","513","514","515","516","517","518","519","520","521","522","523","524","525","526","527","528","529","530","531","532","533","534","535","536","537","538","539","540","541","542","543","544","545","546","547","548","549","550","551","552","553","554","555","556","557","558","559","560","561","562","563","564","565","566","567","568","569","570","571","572","573","574","575","576","577","578","579","580","581","582","583","584","585","586","587","588","589","590","591","592","593","594","595","596","597","598","599","600","601","602","603","604","605","606","607","608","609","610","611","612","613","614","615","616","617","618","619","620","621","622","623","624","625","626","627","628"
,"629","630","631","632","633","634","635","636","637","638","639","640","641","642","643","644","645","646","647","648","649","650","651","652","653","654","655","656","657","658","659","660","661","662","663","664","665","666","667","668","669","670","671","672","673","674","675","676","677","678","679","680","681","682","683","684","685","686","687","688","689","690","691","692","693","694","695","696","697","698","699","700","701","702","703","704","705","706","707","708","709","710","711","712","713","714","715","716","717","718","719","720","721","722","723","724","725","726","727","728","729","730","731","732","733","734","735","736","737","738","739","740","741","742","743","744","745","746","747","748","749","750","751","752","753","754","755","756","757","758","759","760","761","762","763","764","765","766","767","768","769","770","771","772","773","774","775","776","777","778","779","780","781","782","783","784","785","786","787","788","789","790","791","792","793","794","795","796","797","798","799","800","801","802","803","804","805","806","807","808","809","810","811","812","813","814","815","816","817","818","819","820","821","822","823","824","825","826","827","828","829","830","831","832","833","834","835","836","837","838","839","840","841","842","843","844","845","846","847","848","849","850","851","852","853","854","855","856","857","858","859","860","861","862","863","864","865","866","867","868","869","870","871","872","873","874","875","876","877","878","879","880","881","882","883","884","885","886","887","888","889","890","891","892","893","894","895","896","897","898","899","900","901","902","903","904","905","906","907","908","909","910","911","912","913","914","915","916","917","918","919","920","921","922","923","924","925","926","927","928","929","930","931","932","933","934","935","936","937","938","939","940","941","942","943","944","945","946","947","948","949","950","951","952","953","954","955","956","957","958","959","960","961","
962","963","964","965","966","967","968","969","970","971","972","973","974","975","976","977","978","979","980","981","982","983","984","985","986","987","988","989","990","991","992","993","994","995","996","997","998","999","1000","1001","1002","1003","1004","1005","1006","1007","1008","1009","1010","1011","1012","1013","1014","1015","1016","1017","1018","1019","1020","1021","1022","1023","1024","1025","1026","1027","1028","1029","1030","1031","1032","1033","1034","1035","1036","1037","1038","1039","1040","1041","1042","1043","1044","1045","1046","1047","1048","1049","1050","1051","1052","1053","1054","1055","1056","1057","1058","1059","1060","1061","1062","1063","1064","1065","1066","1067","1068","1069","1070","1071","1072","1073","1074","1075","1076","1077","1078","1079","1080","1081","1082","1083","1084","1085","1086","1087","1088","1089","1090","1091","1092","1093","1094","1095","1096","1097","1098","1099","1100","1101","1102","1103","1104","1105","1106","1107","1108","1109","1110","1111","1112","1113","1114","1115","1116","1117","1118","1119","1120","1121","1122","1123","1124","1125","1126","1127","1128","1129","1130","1131","1132","1133","1134","1135","1136","1137","1138","1139","1140","1141","1142","1143","1144","1145","1146","1147","1148","1149","1150","1151","1152","1153","1154","1155","1156","1157","1158","1159","1160","1161","1162","1163","1164","1165","1166","1167","1168","1169","1170","1171","1172","1173","1174","1175","1176","1177","1178","1179","1180","1181","1182","1183","1184","1185","1186","1187","1188","1189","1190","1191","1192","1193","1194","1195","1196","1197","1198","1199","1200","1201","1202","1203","1204","1205","1206","1207","1208","1209","1210","1211","1212","1213","1214","1215","1216","1217","1218","1219","1220","1221","1222","1223","1224","1225","1226","1227","1228","1229","1230","1231","1232","1233","1234","1235","1236","1237","1238","1239","1240","1241","1242","1243","1244","1245","1246","1247","1248","1249","1250","1251","1252","1
253","1254","1255","1256","1257","1258","1259","1260","1261","1262","1263","1264","1265","1266","1267","1268","1269","1270","1271","1272","1273","1274","1275","1276","1277","1278","1279","1280","1281","1282","1283","1284","1285","1286","1287","1288","1289","1290","1291","1292","1293","1294","1295","1296","1297","1298","1299","1300","1301","1302","1303","1304","1305","1306","1307","1308","1309","1310","1311","1312","1313","1314","1315","1316","1317","1318","1319","1320","1321","1322","1323","1324","1325","1326","1327","1328","1329","1330","1331","1332","1333","1334","1335","1336","1337","1338","1339","1340","1341","1342","1343","1344","1345","1346","1347","1348","1349","1350","1351","1352","1353","1354","1355","1356","1357","1358","1359","1360","1361","1362","1363","1364","1365","1366","1367","1368","1369","1370","1371","1372","1373","1374","1375","1376","1377","1378","1379","1380","1381","1382","1383","1384","1385","1386","1387","1388","1389","1390","1391","1392","1393","1394","1395","1396","1397","1398","1399","1400","1401","1402","1403","1404","1405","1406","1407","1408","1409","1410","1411","1412","1413","1414","1415","1416","1417","1418","1419","1420","1421","1422","1423","1424","1425","1426","1427","1428","1429","1430","1431","1432","1433","1434","1435","1436","1437","1438","1439","1440","1441","1442","1443","1444","1445","1446","1447","1448","1449","1450","1451","1452","1453","1454","1455","1456","1457","1458","1459","1460","1461","1462","1463","1464","1465","1466","1467","1468","1469","1470","1471","1472","1473","1474","1475","1476","1477","1478","1479","1480","1481","1482","1483","1484","1485","1486","1487","1488","1489","1490","1491","1492","1493","1494","1495","1496","1497","1498","1499","1500","1501","1502","1503","1504","1505","1506","1507","1508","1509","1510","1511","1512","1513","1514","1515","1516","1517","1518","1519","1520","1521","1522","1523","1524","1525","1526","1527","1528","1529","1530","1531","1532","1533","1534","1535","1536","1537","1538",
"1539","1540","1541","1542","1543","1544","1545","1546","1547","1548","1549","1550","1551","1552","1553","1554","1555","1556","1557","1558","1559","1560","1561","1562","1563","1564","1565","1566","1567","1568","1569","1570","1571","1572","1573","1574","1575","1576","1577","1578","1579","1580","1581","1582","1583","1584","1585","1586","1587","1588","1589","1590","1591","1592","1593","1594","1595","1596","1597","1598","1599","1600","1601","1602","1603","1604","1605","1606","1607","1608","1609","1610","1611","1612","1613","1614","1615","1616","1617","1618","1619","1620","1621","1622","1623","1624","1625","1626","1627","1628","1629","1630","1631","1632","1633","1634","1635","1636","1637","1638","1639","1640","1641","1642","1643","1644","1645","1646","1647","1648","1649","1650","1651","1652","1653","1654","1655","1656","1657","1658","1659","1660","1661","1662","1663","1664","1665","1666","1667","1668","1669","1670","1671","1672","1673","1674","1675","1676","1677","1678","1679","1680","1681","1682","1683","1684","1685","1686","1687","1688","1689","1690","1691","1692","1693","1694","1695","1696","1697","1698","1699","1700","1701","1702","1703","1704","1705","1706","1707","1708","1709","1710","1711","1712","1713","1714","1715","1716","1717","1718","1719","1720","1721","1722","1723","1724","1725","1726","1727","1728","1729","1730","1731","1732","1733","1734","1735","1736","1737","1738","1739","1740","1741","1742","1743","1744","1745","1746","1747","1748","1749","1750","1751","1752","1753","1754","1755","1756","1757","1758","1759","1760","1761","1762","1763","1764","1765","1766","1767","1768","1769","1770","1771","1772","1773","1774","1775","1776","1777","1778","1779","1780","1781","1782","1783","1784","1785","1786","1787","1788","1789","1790","1791","1792","1793","1794","1795","1796","1797","1798","1799","1800","1801","1802","1803","1804","1805","1806","1807","1808","1809","1810","1811","1812","1813","1814","1815","1816","1817","1818","1819","1820","1821","1822","1823","1824
","1825","1826","1827","1828","1829","1830","1831","1832","1833","1834","1835","1836","1837","1838","1839","1840","1841","1842","1843","1844","1845","1846","1847","1848","1849","1850","1851","1852","1853","1854","1855","1856","1857","1858","1859","1860","1861","1862","1863","1864","1865","1866","1867","1868","1869","1870","1871","1872","1873","1874","1875","1876","1877","1878","1879","1880","1881","1882","1883","1884","1885","1886","1887","1888","1889","1890","1891","1892","1893","1894","1895","1896","1897","1898","1899","1900","1901","1902","1903","1904","1905","1906","1907","1908","1909","1910","1911","1912","1913","1914","1915","1916","1917","1918","1919","1920","1921","1922","1923","1924","1925","1926","1927","1928","1929","1930","1931","1932","1933","1934","1935","1936","1937","1938","1939","1940","1941","1942","1943","1944","1945","1946","1947","1948","1949","1950","1951","1952","1953","1954","1955","1956","1957","1958","1959","1960","1961","1962","1963","1964","1965","1966","1967","1968","1969","1970","1971","1972","1973","1974","1975","1976","1977","1978","1979","1980","1981","1982","1983","1984","1985","1986","1987","1988","1989","1990","1991","1992","1993","1994","1995","1996","1997","1998","1999","2000","2001","2002","2003","2004","2005","2006","2007","2008","2009","2010","2011","2012","2013","2014","2015","2016","2017","2018","2019","2020","2021","2022","2023","2024","2025","2026","2027","2028","2029","2030","2031","2032","2033","2034","2035","2036","2037","2038","2039","2040","2041","2042","2043","2044","2045","2046","2047","2048","2049","2050","2051","2052","2053","2054","2055","2056","2057","2058","2059","2060","2061","2062","2063","2064","2065","2066","2067","2068","2069","2070","2071","2072","2073","2074","2075","2076","2077","2078","2079","2080","2081","2082","2083","2084","2085","2086","2087","2088","2089","2090","2091","2092","2093","2094","2095","2096","2097","2098","2099","2100","2101","2102","2103","2104","2105","2106","2107","2108","2109","21
10","2111","2112","2113","2114","2115","2116","2117","2118","2119","2120","2121","2122","2123","2124","2125","2126","2127","2128","2129","2130","2131","2132","2133","2134","2135","2136","2137","2138","2139","2140","2141","2142","2143","2144","2145","2146","2147","2148","2149","2150","2151","2152","2153","2154","2155","2156","2157","2158","2159","2160","2161","2162","2163","2164","2165","2166","2167","2168","2169","2170","2171","2172","2173","2174","2175","2176","2177","2178","2179","2180","2181","2182","2183","2184","2185","2186","2187","2188","2189","2190","2191","2192","2193","2194","2195","2196","2197","2198","2199","2200","2201","2202","2203","2204","2205","2206","2207","2208","2209","2210","2211","2212","2213","2214","2215","2216","2217","2218","2219","2220","2221","2222","2223","2224","2225","2226","2227","2228","2229","2230","2231","2232","2233","2234","2235","2236","2237","2238","2239","2240","2241","2242","2243","2244","2245","2246","2247","2248","2249","2250","2251","2252","2253","2254","2255","2256","2257","2258","2259","2260","2261","2262","2263","2264","2265","2266","2267","2268","2269","2270","2271","2272","2273","2274","2275","2276","2277","2278","2279","2280","2281","2282","2283","2284","2285","2286","2287","2288","2289","2290","2291","2292","2293","2294","2295","2296","2297","2298","2299","2300","2301","2302","2303","2304","2305","2306","2307","2308","2309","2310","2311","2312","2313","2314","2315","2316","2317","2318","2319","2320","2321","2322","2323","2324","2325","2326","2327","2328","2329","2330","2331","2332","2333","2334","2335","2336","2337","2338","2339","2340","2341","2342","2343","2344","2345","2346","2347","2348","2349","2350","2351","2352","2353","2354","2355","2356","2357","2358","2359","2360","2361","2362","2363","2364","2365","2366","2367","2368","2369","2370","2371","2372","2373","2374","2375","2376","2377","2378","2379","2380","2381","2382","2383","2384","2385","2386","2387","2388","2389","2390","2391","2392","2393","2394","2395","
2396","2397","2398","2399","2400","2401","2402","2403","2404","2405","2406","2407","2408","2409","2410","2411","2412","2413","2414","2415","2416","2417","2418","2419","2420","2421","2422","2423","2424","2425","2426","2427","2428","2429","2430","2431","2432","2433","2434","2435","2436","2437","2438","2439","2440","2441","2442","2443","2444","2445","2446","2447","2448","2449","2450","2451","2452","2453","2454","2455","2456","2457","2458","2459","2460","2461","2462","2463","2464","2465","2466","2467","2468","2469","2470","2471","2472","2473","2474","2475","2476","2477","2478","2479","2480","2481","2482","2483","2484","2485","2486","2487","2488","2489","2490","2491","2492","2493","2494","2495","2496","2497","2498","2499","2500","2501","2502","2503","2504","2505","2506","2507","2508","2509","2510","2511","2512","2513","2514","2515","2516","2517","2518","2519","2520","2521","2522","2523","2524","2525","2526","2527","2528","2529","2530","2531","2532","2533","2534","2535","2536","2537","2538","2539","2540","2541","2542","2543","2544","2545","2546","2547","2548","2549","2550","2551","2552","2553","2554","2555","2556","2557","2558","2559","2560","2561","2562","2563","2564","2565","2566","2567","2568","2569","2570","2571","2572","2573","2574","2575","2576","2577","2578","2579","2580","2581","2582","2583","2584","2585","2586","2587","2588","2589","2590","2591","2592","2593","2594","2595","2596","2597","2598","2599","2600","2601","2602","2603","2604","2605","2606","2607","2608","2609","2610","2611","2612","2613","2614","2615","2616","2617","2618","2619","2620","2621","2622","2623","2624","2625","2626","2627","2628","2629","2630","2631","2632","2633","2634","2635","2636","2637","2638","2639","2640","2641","2642","2643","2644","2645","2646","2647","2648","2649","2650","2651","2652","2653","2654","2655","2656","2657","2658","2659","2660","2661","2662","2663","2664","2665","2666","2667","2668","2669","2670","2671","2672","2673","2674","2675","2676","2677","2678","2679","2680","2681"
,"2682","2683","2684","2685","2686","2687","2688","2689","2690","2691","2692","2693","2694","2695","2696","2697","2698","2699","2700","2701","2702","2703","2704","2705","2706","2707","2708","2709","2710","2711","2712","2713","2714","2715","2716","2717","2718","2719","2720","2721","2722","2723","2724","2725","2726","2727","2728","2729","2730","2731","2732","2733","2734","2735","2736","2737","2738","2739","2740","2741","2742","2743","2744","2745","2746","2747","2748","2749","2750","2751","2752","2753","2754","2755","2756","2757","2758","2759","2760","2761","2762","2763","2764","2765","2766","2767","2768","2769","2770","2771","2772","2773","2774","2775","2776","2777","2778","2779","2780","2781","2782","2783","2784","2785","2786","2787","2788","2789","2790","2791","2792","2793","2794","2795","2796","2797","2798","2799","2800","2801","2802","2803","2804","2805","2806","2807","2808","2809","2810","2811","2812","2813","2814","2815","2816","2817","2818","2819","2820","2821","2822","2823","2824","2825","2826","2827","2828","2829","2830","2831","2832","2833","2834","2835","2836","2837","2838","2839","2840","2841","2842","2843","2844","2845","2846","2847","2848","2849","2850","2851","2852","2853","2854","2855","2856","2857","2858","2859","2860","2861","2862","2863","2864","2865","2866","2867","2868","2869","2870","2871","2872","2873","2874","2875","2876","2877","2878","2879","2880","2881","2882","2883","2884","2885","2886","2887","2888","2889","2890","2891","2892","2893","2894","2895","2896","2897","2898","2899","2900","2901","2902","2903","2904","2905","2906","2907","2908","2909","2910","2911","2912","2913","2914"],[20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,20,22,22,22,22,22,22,22,22,22,22,22,22,
22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,22,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,26,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,11,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,14,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,18,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,19,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4
,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,2,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,21,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,23,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,
12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,12,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,15,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,17,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,24,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,2
5,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,25,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,8,9,9,9,9,9,9,9,9,9,9,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,10,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,13,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16,16],["DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 
821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 
821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","DHH 704-608","DHH 704-608","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","ELL 106-808","ELL 106-808","ELL 106-808","DMM 607-701","DMM 607-701","DMM 607-701","EMH 413-606","EMH 413-606","EMH 413-606","DML 821-510","DML 821-510","DML 
821-510","DML 821-510","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","DMH 803-102","DMH 803-102","DMH 803-102","EMM 802-415","EMM 802-415","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EML 915-111","EML 915-111","EML 915-111","EML 915-111","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","EMM 802-415","EMM 802-415","EMM 
802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","DLL 112-103","DLL 112-103","DLL 112-103","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","DMH 803-102","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DML 821-510","DML 821-510","DML 821-510","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","EML 915-111","EML 915-111","EML 
915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","ELL 106-808","ELL 106-808","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DMH 803-102","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 
914-201","EHH 914-201","EHH 914-201","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EML 915-111","EMH 413-606","EMH 413-606","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DML 821-510","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMM 607-701","DMH 803-102","DMH 803-102","DMH 803-102","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","EHH 914-201","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","DLL 112-103","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","ELL 106-808","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","DHH 704-608","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMH 413-606","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 
802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EMM 802-415","EML 915-111","EML 915-111","EML 915-111","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EMH 422-702","EMH 422-702","EMH 
422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","EHH 917-918","EHH 917-918","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EML 406-807","EML 406-807","EML 406-807","EML 406-807","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","EML 406-807","EML 406-807","EML 406-807","EML 406-807","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","DMM 804-816","DMM 804-816","DMM 
804-816","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","DMM 804-816","DMM 804-816","DMM 804-816","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","DMH 414-609","DMH 
414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","EML 406-807","EML 406-807","DHH 214-425","DHH 214-425","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 
422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 
51","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EMH 422-702","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EML 406-807","EML 406-807","EML 406-807","EML 406-807","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","EMH 422-702","EMH 422-702","EMH 422-702","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 406-807","EML 
406-807","EML 406-807","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","ELL 1004-41","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EMM 1003-40","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","EHH 917-918","DHH 214-425","DHH 214-425","DHH 214-425","DHH 214-425","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DMM 804-816","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DLL 508- 51","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DML 907-301","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DMH 414-609","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","DMM 412-602","DMM 412-602","DMM 412-602","EML 213-817","EML 213-817","EML 213-817","EHH 417-913","EHH 417-913","EMH 912-703","EMH 912-703","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 
903-302","DLL 903-302","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DLL 903-302","DLL 903-302","DLL 903-302","DLL 
903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 
-61","EMM 418 -61","EMM 418 -61","EMM 418 -61","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 
417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EML 213-817","EML 213-817","EML 213-817","EML 213-817","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 
412-602","DMM 412-602","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EHH 417-913","EHH 417-913","EHH 
417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 
417-913","EHH 417-913","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DML 812-616","DMH 706-909","DMH 706-909","DMH 706-909","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EML 213-817","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMM 418 -61","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","EMH 912-703","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","DHH 611-210","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","ELL 705-100","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","DLL 903-302","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","EHH 417-913","DMH 706-909","DMH 706-909","DMH 706-909","DMH 
706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMH 706-909","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DMM 412-602","DML 812-616","DML 812-616","DML 812-616"],["AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT"
,"AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIG
HT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT"
,"AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AO
I_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_
TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT
_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_
TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","
AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEX
T","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEX
T","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIG
HT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEX
T","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AO
I_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT"
,"AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEF
T_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AO
I_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_T
EXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT
","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT
_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT",
"AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LE
FT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AO
I_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT
_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT",
"AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_RIGHT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT","AOI_LEFT_TEXT"],[1.619,0.063,0.476,1.153,0.073,11.753,14.136,3.873,0.13,0.32,0.723,0.09,0.06,0.083,0.557,8.586,0.083,1.211,0.18,2.608,0.07,1.816,0.07,0.503,2.044,0.137,3.109,0.187,1.924,0.13,7.643,0.073,1.941,0.837,0.123,0.093,0.25,0.307,0.083,0.063,1.644,0.14,3.875,0.343,2.009,2.843,17.25,2.28,0.367,0.263,0.22,1.126,0.08,0.087,0.954,0.513,0.143,0.89,0.21,0.64,0.067,1.347,0.09,11.903,0.24,1.991,0.263,3.874,0.36,3.913,0.453,1.845,9.548,0.17,3.114,0.14,2.167,6.585,0.463,9.931,0.147,0.13,0.11,0.163,0.077,0.514,0.227,12.453,0.077,1.414,7.495,0.467,0.41,0.387,0.123,10.358,0.227,1.907,0.167,0.483,24.655,0.143,37.548,24.024,1.767,0.17,21.878,26.739,15.141,3.787,0.533,36.824,0.664,0.27,41.872,4.927,0.636,2.293,4.36,0.23,1.536,0.193,2.443,0.19,1.784,0.217,1.319,0.277,1.841,0.16,1.603,0.253,1.667,0.103,1.347,0.18,2.8,0.203,1.77,0.2,1.706,0.38,3.002,0.313,2.526,0.297,1.193,0.347,0.797,0.263,1.411,0.133,4.505,0.17,2.842,0.277,2.298,0.157,1.15,0.133,1.821,0.227,3.409,0.313,9.623,1.713,0.236,0.62,0.06,0.17,0.077,0.319,0.647,0.163,0.257,4.825,0.24,1.323,0.17,3.301,0.103,1.702,0.473,0.934,0.197,1.123,0.26,2.457,3.737,0.09,0.697,0.063,1.087,0.361,0.293,0.913,3.567,0.197,1.917,0.117,0.996,0.25,0.738,0.43,1.679,0.203,3.609,0.243,2.406,0.184,1.843,0.227,3.477,0.367,5.637,0.877,1.75,1.084,12.473,1.017,1.316,1.101,1.691,0.26,1.697,0.26,1.519,0.457,2.796,0.394,1.332,0.356,2.707,0.263,3.471,0.207,5.917,0.44,0.327,0.087,2.835,20.444,1.097,0.25,1.757,0.537,0.986,17.358,0.383,2.153,0.12,1.693,0.167,2.564,0.19,4.503,0.204,1.293,0.06,1.92,0.14,1.109,0.147,2.253,0.167,1.325,0.1,4.141,0.183,3.382,18.493,7.283,0.737,1.787,0.647,15.001,12.641,
0.2,2.797,0.09,0.489,0.183,0.323,0.57,0.083,0.4,0.41,0.09,0.857,0.72,40.937,34.538,9.935,1.134,1.991,33.287,36.842,0.929,2.301,1.03,41.067,52.597,5.477,0.523,0.866,1.183,0.093,32.953,0.07,12.261,46.205,1.463,0.323,0.12,0.12,0.073,0.317,2.157,1.178,1.549,0.513,1.141,0.12,1.066,0.72,0.456,0.11,2.048,1.229,15.418,0.236,0.21,0.067,0.654,4.981,5.273,1.695,0.92,2.277,0.137,0.916,5.1,0.554,0.487,0.744,1.617,0.75,1.406,4.694,1.891,2.891,0.497,0.37,4.503,0.574,25.824,21.466,5.22,1.587,49.722,2.459,0.14,35.148,2.913,0.384,32.522,8.334,2.223,0.353,0.207,0.858,3.587,4.153,0.409,1.463,0.153,2.182,0.303,0.373,0.213,0.704,2.556,3.827,0.117,3.686,64.721,33.827,16.91,0.367,1.717,12.367,31.851,49.039,1.584,0.24,5.763,7.363,34.146,41.264,0.71,0.157,0.177,0.977,54.176,0.257,1.022,60.754,1.342,0.797,11.034,0.073,27.324,29.651,4.712,1.23,8.051,5.11,36.51,0.16,1.61,27.084,0.406,10.7,3,0.927,13.79,0.834,3.652,0.127,1.993,3.485,5.354,0.28,14.176,0.534,4.378,0.163,6.035,32.453,2.54,0.207,26.5,28.787,7.012,0.607,0.16,0.07,10.68,0.663,34.087,12.584,26.162,0.847,0.817,45.271,31.888,0.493,0.387,0.617,0.314,0.9,18.185,18.84,19.93,3.067,0.657,0.813,17.563,27.355,33.071,22.95,1.063,6.481,12.948,0.183,0.534,0.483,7.08,0.887,57.648,65.233,5.723,0.82,10.38,26.269,0.113,0.267,33.92,1.394,5.505,0.447,0.323,0.567,0.083,0.37,0.063,0.527,0.213,35.529,33.762,0.608,0.663,0.486,6.567,1.29,11.781,4.617,19.905,12.535,6.101,0.277,0.766,32.926,46.549,19.711,42.219,13.809,0.083,0.363,25.911,31.441,1.274,0.577,1.557,27.45,35.839,2.358,0.42,0.19,0.1,25.41,0.067,1.359,0.097,3.646,0.177,0.32,0.13,0.523,0.614,0.07,7.835,0.093,0.54,23.095,12.255,1.167,13.777,13.259,2.956,1.117,15.494,15.448,15.25,0.107,21.142,5.614,4.095,7.86,18.809,27.8,8.969,24.266,0.073,1.693,7.655,1.144,0.07,23.497,16.098,1.033,25.432,0.093,13.333,2.346,0.32,16.978,0.357,28.282,4.587,12.371,1.168,0.916,2.209,0.604,0.18,0.553,0.167,0.257,0.177,1.303,0.594,2.287,0.12,3.933,0.163,1.464,0.153,18.37,10.894,20.617,1.024,4.66,10.865,0.16,0.36,0.639,1.23,0.
09,0.16,0.663,0.757,0.853,0.848,0.463,0.727,0.09,1.197,17.015,3.817,0.14,0.233,1.863,1.423,0.454,0.4,0.324,13.771,0.07,0.594,1.376,9.069,0.474,0.477,15.204,1.766,0.25,2.044,0.349,1.909,0.177,3.93,0.24,0.14,0.48,3.27,0.55,0.163,1.101,0.08,1.293,0.157,1.003,0.183,1.153,3.098,0.163,0.78,9.423,17.561,1.316,1.643,1.437,0.117,1.746,0.443,0.446,8.274,15.683,6.241,0.187,1.143,0.23,1.679,0.107,0.936,0.133,1.973,1.036,2.002,4.774,2.952,0.26,0.654,0.08,1.075,0.293,0.99,0.24,0.607,18.608,12.813,0.227,7.475,3.05,0.22,1.745,6.039,0.986,7.685,0.083,1.405,0.267,1.883,0.2,0.8,0.897,0.911,8.061,3.729,0.32,0.313,0.22,0.69,0.447,1.03,7.156,0.354,18.646,31.102,0.333,3.188,24.033,0.21,4.083,13.567,1.377,0.153,0.53,0.717,21.216,42.355,0.473,3.533,0.53,37.634,0.596,7.234,0.966,1.357,4.568,36.339,46.437,56.133,1.197,0.163,0.07,53.41,0.613,22.493,20.6,0.26,15.773,0.5,32.396,19.033,2.933,58.102,0.14,10.991,0.29,0.45,5.493,26.979,33.992,0.24,1.81,6.467,1.532,0.18,0.103,41.743,1.039,0.456,55.821,1.957,56.748,16.093,1.578,31.392,35.078,0.8,7.665,0.62,0.317,0.754,0.12,0.672,0.473,1.816,3.615,0.707,49.876,0.393,0.797,1.409,1.234,37.575,0.147,0.58,1.176,55.5,36.815,1.214,2.11,0.604,1.782,0.763,1.638,0.093,0.153,25.747,0.407,1.367,0.344,3.192,0.083,6.047,0.326,5.984,0.314,4.025,0.733,41.164,0.721,0.896,1.158,0.303,0.23,0.213,9.367,22.16,1.387,0.18,1.243,0.41,0.543,11.011,4.535,3.104,7.383,22.56,4.355,0.543,0.303,2.389,2.233,6.926,2.443,2.626,0.187,6.53,0.163,0.193,0.09,1.863,0.243,2.417,0.163,27.545,0.103,8.976,52.559,5.949,0.17,1.307,0.237,0.093,2.218,0.286,24.174,0.14,4.407,0.337,3.157,0.067,7.381,0.097,4.89,0.43,7.896,39.072,27.907,0.067,0.4,0.796,50.263,0.147,5.291,53.687,0.734,0.2,1.268,0.063,3.475,0.067,0.917,0.067,0.886,6.932,44.171,0.323,0.587,1.71,18.042,0.513,0.54,36.625,0.566,1.717,1.862,2.291,0.26,0.48,0.183,2.626,0.669,1.23,0.424,0.807,0.19,0.57,0.623,1.059,1.033,1.488,42.69,24.871,2.172,1.881,17.91,5.417,2.558,1.128,0.213,0.627,0.431,0.22,0.326,0.754,0.367,0.283,0.464,0.23,0.513,0.43,2
.76,0.33,0.7,0.52,0.677,0.393,2.008,2.357,29.999,24.229,4.58,0.444,0.763,6.71,0.616,2.38,3.444,3.012,0.573,35.613,33.757,1.557,2.72,0.237,3.472,2.838,1.251,1.011,0.153,0.224,0.627,2.273,1.016,0.589,1.371,11.614,8.81,0.737,0.127,0.363,0.62,0.9,0.337,1.578,28.72,13.854,7.035,2.627,0.647,0.376,0.426,6.012,2.704,26.711,24.531,2.93,0.233,1.006,0.203,6.267,0.95,0.493,1.173,16.724,28.786,2.574,0.32,0.806,1.413,0.557,4.732,3.944,0.2,0.894,1.54,0.762,20.874,25.597,12.345,9.437,1.074,1.358,6.44,14.807,3.803,5.161,4.802,9.568,0.962,17.797,31.08,9.719,0.486,3.814,0.077,1.17,0.693,0.306,11.673,1.254,8.35,0.499,1.406,1.586,0.284,0.187,0.087,0.107,0.093,0.193,1.57,0.34,0.277,0.167,2.218,0.083,2.875,10.524,0.904,6.314,0.24,0.083,0.14,1.07,0.649,1.966,0.277,5.429,0.589,4.514,0.107,0.557,0.227,0.742,0.84,8.022,0.733,1.322,47.367,15.931,0.684,0.912,0.267,16.334,9.388,0.5,0.173,1.038,0.32,7.842,0.083,0.263,0.063,0.257,0.316,1.468,0.09,1.852,4.285,1.023,1.343,0.143,0.07,0.093,43.689,0.903,0.577,29.66,3.898,13.508,13.852,0.147,0.81,0.213,6.659,0.917,9.746,0.113,0.143,0.34,1.019,0.313,10.444,0.494,0.09,3.538,8.166,0.39,1.013,0.307,13.384,0.531,0.077,14.189,0.346,15.343,12.315,0.906,0.771,0.49,14.334,9.307,0.52,1.847,0.657,1.06,28.625,29.787,1.353,1.267,3.017,1,38.993,20.022,0.17,0.107,6.095,0.243,18.358,22.619,3.623,5.728,0.453,17.783,8.565,15.584,20.569,0.147,0.14,22.27,15.35,0.55,22.544,3.832,1.1,23.594,0.274,1.1,24.704,21.332,0.533,2.35,2.434,0.67,0.27,0.323,0.23,2.054,0.243,0.727,0.543,60.08,25.997,0.33,0.144,124.634,78.51,0.197,0.837,5.206,0.797,2.294,3.06,9.516,0.494,1.443,106.387,1.15,0.586,43.001,0.57,1.314,0.804,78.818,52.751,0.924,0.613,7.243,0.073,56.403,0.85,19.991,69.099,2.385,3.896,0.097,37.798,2.35,0.287,0.824,0.573,1.546,0.337,0.94,5.59,0.286,92.962,39.951,1.395,0.643,0.083,0.22,5.315,0.097,0.403,1.469,0.903,0.347,0.107,0.623,0.22,0.377,1.214,3.18,0.597,9.239,1.989,0.513,13.023,0.28,11.772,1.153,2.061,1.126,20.311,0.9,48.642,72.513,1.333,0.16,1.174,51.399,0.103,29.019,0.07
,16.68,106.8,1.46,1.104,46.385,0.34,0.353,86.934,27.353,1.739,10.215,1.563,0.883,0.307,0.13,1.013,0.353,26.765,24.584,0.35,0.37,0.62,0.12,47.06,31.538,0.53,49.021,34.99,2.666,2.024,0.497,0.14,0.097,37.646,36.771,1.41,0.097,3.446,0.293,67.36,29.896,0.173,10.543,1.391,0.506,0.18,49.99,39.074,0.333,0.15,39.233,23.294,0.376,0.23,3.186,1.793,1.029,0.177,0.293,44.512,51.689,49.057,45.084,40.561,58.834,5.382,1.097,2.226,2.723,1.349,0.31,0.194,15.607,0.083,3.147,0.3,3.515,0.143,0.54,0.113,0.083,0.073,2.167,0.2,7.339,0.567,2.494,0.23,30.516,0.733,0.652,0.137,1.213,0.327,0.434,0.143,1.925,0.48,0.257,0.087,0.92,0.567,0.136,0.08,0.297,0.11,0.554,1.256,0.336,0.56,0.57,0.137,0.077,0.921,0.49,0.608,3.062,0.216,0.167,0.117,0.334,1.133,0.213,3.451,0.44,0.09,10.77,0.287,2.564,0.08,2.249,0.123,2.634,0.54,2.082,6.495,0.883,0.08,0.794,0.777,1.647,0.277,2.001,0.073,4.235,2.121,0.41,6.788,0.077,3.565,0.207,0.293,0.463,0.08,20.858,0.94,0.336,0.08,0.19,0.587,0.556,0.337,0.563,0.618,3.874,0.157,1.24,0.706,2.506,0.07,0.183,1.163,1.634,0.363,1.22,0.667,0.117,11.404,0.223,1.475,0.436,0.981,0.183,49.793,8.863,6.131,0.617,1.501,3.35,0.414,4.261,0.65,2.018,3.311,3.698,0.98,30.965,0.3,13.208,0.163,4.025,0.723,15.711,4.065,14.185,0.193,0.536,4.98,2.826,0.397,0.263,2.51,1.123,0.333,0.08,20.389,0.257,10.123,0.15,2.016,0.157,8.998,0.8,3.232,59.172,7.324,14.44,0.62,1.976,0.223,7.046,5.111,0.42,0.41,2.297,2.155,1.62,13.922,8.274,0.393,6.234,0.133,29.397,0.063,9.5,0.347,0.601,2.006,0.143,0.157,0.073,0.62,0.2,1.504,0.922,3.671,0.817,0.233,0.206,2.931,4.068,0.264,1.37,0.203,1.882,4.068,0.123,2.049,0.133,0.087,0.143,0.5,2.117,8.763,0.07,0.886,0.69,0.087,10.347,0.881,2.939,1.973,0.17,28.626,1.646,0.153,0.58,0.257,0.177,0.567,6.537,4.7,1.489,0.617,2.59,1.636,2.03,0.093,2.209,1.014,25.552,9.249,2.286,0.177,0.17,0.433,0.253,0.963,9.137,2.13,4.807,12.432,0.903,9.822,0.476,4.524,0.443,2.804,0.27,0.117,0.639,0.794,0.623,1.104,0.557,0.891,0.51,0.313,22.281,0.207,4.309,0.07,9.469,0.27,0.993,0.1,1.207,33.12,1.6,0.58,4
.65,0.08,2.258,1.405,1.26,0.07,0.484,0.127,0.16,0.123,1.636,35.499,53.123,0.563,0.103,2.764,0.92,0.837,2.353,0.313,0.663,0.197,0.74,6.796,8.87,0.15,0.463,0.08,3.125,0.263,38.773,26.433,0.12,0.267,52.687,27.836,0.207,7.908,0.123,0.347,0.263,43.385,51.144,11.204,0.688,7.96,44.858,54.723,0.19,10.024,1.044,9.268,11.487,15.179,0.266,0.596,0.713,68.014,0.357,1.79,79.743,15.6,0.33,16.662,1.077,0.823,6.628,0.337,55.17,36.556,0.073,16.851,14.161,1.071,12.141,2.155,0.087,0.714,4.084,30.843,15.077,10.666,1.676,0.347,13.185,74.662,38.891,5.26,1.246,8.187,0.217,0.25,1.626,5.667,7.653,0.693,0.6,6.551,0.823,3.242,0.257,0.443,0.986,27.197,12.166,1.417,5.513,3.547,0.293,0.871,0.11,0.621,0.187,0.937,0.664,0.657,0.323,45.503,38.303,35.421,40.78,5.252,3.359,0.43,1.613,0.64,0.29,3.286,0.167,0.496,0.143,1.03,32.58,40.635,1.961,15.585,2.195,0.564,58.475,41.171,15.744,1.291,15.562,0.896,27.701,1.493,16.177,30.165,0.303,42.804,35.015,6.934,0.433,36.997,31.484,5.053,0.19,0.29,14.937,0.434,12.393,16.028,3.641,0.659,36.131,26.332,5.795,0.776,0.407,43.899,21.833,21.846,2.484,0.28,0.96,54.511,61.735,2.553,0.744,0.99,1.577,1.621,0.2,0.52,0.993,4.906,0.743,0.34,0.706,0.597,0.28,0.71,1.872,0.926,0.6,0.327,1.59,3.898,2.118,1.317,0.19,0.143,0.287,1.694,0.307,42.905,31.249,2.909,0.373,0.324,0.944,1.277,0.866,0.557,52.368,71.349,1.143,0.137,0.814,4.361,0.464,59,47.183,1.424,83.474,50.861,1.384,0.4,2.753,37.195,43.3,0.163,62.023,79.55,73.437,91.751,0.673,49.167,76.815,63.723,32.585,5.182,1.349,0.36,0.133,1.2,2.094,0.33,1.061,56.994,0.55,101.517,12.347,0.34,4.383,23.734,20.609,1.476,4.017,9.049,0.093,3.794,0.336,0.28,52.086,23.019,39.921,4.542,0.073,0.347,0.193,93.126,0.984,37.542,0.233,0.257,0.26,0.35,2.39,0.55,72.765,48.538,46.24,10.713,0.58,0.907,10.029,13.993,5.838,6.103,3.401,0.374,50.832,40.434,0.223,38.367,0.157,0.14,0.263,0.377,58.212,0.643,2.757,0.743,0.827,59.39,1.586,0.577,15.45,28.039,0.733,1.838,2.434,0.463,3.881,2.841,0.18,3.219,0.3,0.35,0.11,53.315,1.476,0.413,88.418,0.09,8.048,0.934,0.187
,0.36,1.306,56.324,45.304,0.153,6.271,5.915,1.176,3.968,0.337,1.611,0.36,3.24,2.637,0.103,10.389,2.299,1.757,4.716,2.37,0.95,0.743,0.979,0.66,2.284,94.928,0.33,1.535,22.151,0.447,159.26,120.142,2.181,0.113,5.467,0.553,8.039,0.416,1.938,0.237,27.546,1.593,0.683,0.613,0.163,1.204,0.31,3.073,0.34,0.157,0.237,0.437,0.546,2.226,0.153,4.543,0.1,0.541,0.206,0.273,10.652,0.32,21.883,0.16,15.312,0.74,0.267,1.232,0.6,0.16,0.32,0.409,0.473,0.077,0.68,1.283,0.367,2.454,0.11,6.159,4.517,0.237,0.549,0.08,0.777,42.33,0.396,0.25,0.08,0.55,0.077,0.327,0.237,0.08,0.08,0.303,0.236,0.55,3.589,8.217,1.744,0.363,0.09,1.879,5.402,0.254,0.33,1.572,0.23,7.083,0.107,4.224,0.163,40.158,0.476,3.422,0.077,0.133,0.137,0.063,0.443,1.032,5.846,0.667,1.577,0.724,3.206,0.24,0.286,0.153,2.269,0.257,0.133,0.063,0.577,0.19,0.843,0.186,1.23,0.187,1.206,0.067,2.677,0.123,2.241,0.17,2.337,0.107,1.426,0.52,1.743,0.607,1.424,0.326,2.932,0.96,0.103,0.46,0.107,1.453,0.067,0.601,0.947,0.18,0.123,0.833,57.814,1.327,0.15,1.604,0.093,0.543,0.233,1.711,0.557,8.119,0.167,1.69,0.594,0.65,0.08,2.823,0.33,14.037,0.077,2.819,0.117,1.041,2.014,0.077,0.11,1.096,0.163,1.856,0.39,0.077,0.426,0.233,0.073,0.374,0.51,0.253,0.13,0.12,16.963,0.822,0.083,0.49,0.18,0.31,0.38,0.163,0.09,0.154,0.473,0.557,0.314,0.64,0.47,0.09,0.467,0.08,0.25,1.161,0.307,1.014,17.82,1.133,0.143,0.347,1.327,36.058,1.579,0.083,0.479,0.163,4.785,0.433,0.797,1.889,45.756,2.654,0.597,2.924,0.317,1.153,0.077,0.097,2.987,1.434,0.223,2.349,1.361,8.512,0.093,0.717,0.283,0.14,0.337,0.457,1.593,0.577,27.661,15.518,43.709,0.796,0.257,1.577,1.25,0.153,2.073,0.107,0.803,0.697,0.1,0.177,0.173,0.077,0.49,0.08,33.873,0.263,1.815,0.293,5.162,2.778,1.406,0.173,34.139,1.34,0.073,0.73,0.327,2.413,1.307,0.077,8.78,0.09,0.773,1.127,0.477,3.305,0.277,0.573,0.073,1.846,0.797,1.296,0.723,0.684,0.477,0.267,0.077,10.037,0.29,5.472,0.097,0.323,0.25,17.834,4.045,18.002,0.353,17.951,1.887,2.05,1.66,0.407,0.384,2.883,0.523,2.373,0.81,0.093,0.173,4.197,0.077,0.09,0.187,14.851,0.263
,8.75,0.123,0.67,4.208,0.363,1.158,0.163,2.59,0.197,0.227,0.173,1.02,2.849,0.383,1.717,0.433,1.396,0.343,1.09,0.263,9.075,0.093,0.143,0.55,0.21,6.019,33.165,2.719,1.401,3.61,0.133,1.1,1.3,0.153,0.323,1.857,0.08,0.482,59.848,33.339,0.77,1.697,0.75,1.33,0.15,0.433,0.293,0.68,0.927,0.077,0.09,0.063,0.733,0.453,0.457,3.055,0.273,4.285,0.29,1.964,0.333,0.719,3.205,0.123,3.127,0.11,2.704,0.8,3.581,0.2,0.687,0.293,2.401,0.563,15.415,0.09,1.793,0.546,0.234,1.836,0.9,0.167,2.048,0.133,1.35,0.253,0.133,1.071,0.193,0.287,0.233,0.123,0.988,3.071,0.343,1.157,0.063,0.33,2.635,30.737,0.22,3.346,0.14,0.899,2.14,11.702,54.176,1.357,2.688,0.213,5.627,1.08,1.279,4.401,2.617,5.186,5.843,0.377,0.3,0.73,1.05,0.296,0.6,0.167,1.152,1.653,55.312,52.311,2.52,0.2,0.46,0.42,0.546,0.063,0.861,0.227,0.961,0.52,0.476,0.437,0.61,0.127,63.783,1.981,8.539,44.9,0.373,41.49,85.552,2.533,1.84,0.463,0.53,1.86,0.707,0.443,0.337,0.967,1.753,0.557,0.79,1.628,3.687,0.346,0.28,0.13,0.23,0.634,0.273,0.687,0.357,1.426,4.253,8.42,13.282,0.37,0.52,7.702,4.654,3.343,1.054,0.673,2.942,0.11,0.427,2.443,0.943,2.68,0.437,0.21,8.413,20.692,0.32,0.11,10.993,13.362,0.083,5.169,11.38,0.32,0.39,0.829,23.5,1.673,11.973,42.073,16.928,13.364,1.116,0.32,3.087,35.342,0.117,0.173,1.885,0.833,56.134,2.459,1.246,0.213,0.407,0.95,6.715,0.093,40.95,56.318,1.13,0.127,0.386,0.3,43.353,6.742,25.34,18.776,13.901,2.54,3.29,31.146,1.946,0.453,2.714,8.358,7.766,7.506,1.147,1.304,13.286,46.842,23.31,0.69,12.628,2.519,7.75,21.425,0.127,1.16,7.474,1.299,5.088,1.8,31.877,29.31,3.445,0.296,27.703,10.879,0.613,0.76,2.171,0.707,4.071,3.198,1.068,1.341,3.889,1.743,2.847,3.159,48.41,35.441,0.123,2.161,0.34,0.707,0.914,0.167,0.187,0.253,0.664,0.553,0.177,0.333,0.406,0.29,0.217,0.39,3.537,35.276,0.487,8.086,1.797,40.985,0.357,54.023,55.715,2.156,10.9,0.7,77.944,19.552,131.904,0.653,11.755,15.103,4.544,1.04,9.865,5.75,0.753,0.696,0.42,0.353,75.286,101.823,0.437,1.007,2.174,14.377,0.143,0.534,3.21,0.297,3.219,3.967,0.093,0.597,39.576,49.113,0.734,1.22
9,2.007,1.059,0.426,6.223,25.091,17.174,3.486,1.06,0.35,0.93,0.889,0.796,57.284,0.27,0.374,0.377,53.032,52.477,0.859,11.341,2.932,0.85,0.1,0.663,1.56,2.041,1.931,2.334,0.52,2.34,0.401,0.237,1.559,1.44,0.3,74.282,0.841,0.447,62.099,13.668,2.764,1.614,10.483,2.234,0.49,0.21,0.396,1.987,61.285,0.38,2.092,0.819,0.454,68.786,1.512,0.686,14.082,0.36,50.054,81.523,0.14,5.166,12.74,0.174,0.614,60.19,52.283,20.346,2.86,0.538,37.036,2.601,0.443,13.454,1.824,35.421,4.855,1.378,0.373,0.493,1.007,32.317,36.976,0.564,5.109,2.499,0.16,8.561,52.64,24.631,0.414,0.386,0.8,51.951,0.107,12.102,15.009,1.377,0.367,1.011,24.01,1.36,0.183,41.317,2.081,2.306,60.686,4.738,3.134,3.789,0.073,1.887,41.925,11.195,3.005,49.305,7.025,0.167,2.243,0.487,32.952,24.847,1.45,9.179,4.607,1.394,42.212,12.255,18.325,1.097,24.387,2.161,6.919,0.64,0.894,0.927,1.044,50.254,0.16,0.599,0.586,0.567,42.725,2.134,0.097,0.2,1.246,59.624,41.588,4.727,0.304,0.23,0.48,1.184,0.597,1.067,11.147,0.07,13.228,0.097,1.747,0.143,2.615,0.08,4.353,0.22,0.083,0.197,0.073,0.08,0.413,0.227,4.623,0.794,0.24,0.12,0.591,0.2,1.26,0.2,0.25,0.45,0.476,0.083,0.367,0.187,0.5,0.137,3.675,0.07,0.496,4.619,0.117,0.83,0.333,1.148,0.29,0.09,0.247,0.123,0.18,0.147,0.163,0.083,1.106,0.223,1.474,0.467,0.4,0.103,0.087,0.277,0.806,0.799,0.28,0.904,0.317,0.51,0.316,0.593,0.48,0.153,0.976,1.165,0.306,0.89,0.724,0.1,0.09,0.826,0.996,0.153,0.377,0.946,1.024,0.28,0.897,1.46,0.514,0.977,1.066,0.43,0.253,0.924,0.177,0.714,0.183,0.473,0.534,0.914,0.14,0.087,0.577,0.09,0.23,0.09,0.15,0.18,0.6,0.257,0.253,0.2,0.18,0.456,0.157,0.077,0.227,0.117,0.464,0.15,0.173,2.684,0.07,0.207,0.117,0.137,0.187,0.38,0.193,0.18,0.65,1.027,0.407],[7.38956395367764,4.14313472639153,6.16541785423142,7.05012252026906,4.29045944114839,9.37186380613285,9.55648001399953,8.26178467951475,4.86753445045558,5.76832099579377,6.58340922215876,4.49980967033027,4.0943445622221,4.4188406077966,6.32256523992728,9.05788824878451,4.4188406077966,7.09920174355309,5.19295685089021,7.86633892304
654,4.24849524204936,7.50439155916124,4.24849524204936,6.22059017009974,7.6226639513236,4.91998092582813,8.04205641005875,5.23110861685459,7.56216163122565,4.86753445045558,8.94154547524288,4.29045944114839,7.57095858316901,6.72982407048948,4.81218435537242,4.53259949315326,5.52146091786225,5.7268477475872,4.4188406077966,4.14313472639153,7.40488757561612,4.9416424226093,8.26230094178745,5.83773044716594,7.60539236481493,7.952615111651,9.75556742245951,7.73193072194849,5.90536184805457,5.57215403217776,5.39362754635236,7.02642680869964,4.38202663467388,4.46590811865458,6.86066367144829,6.24027584517077,4.96284463025991,6.79122146272619,5.34710753071747,6.46146817635372,4.20469261939097,7.20563517641036,4.49980967033027,9.38454574816788,5.48063892334199,7.5963923040642,5.57215403217776,8.26204284396694,5.88610403145016,8.27205962221041,6.11589212548303,7.52023455647463,9.16408698745872,5.13579843705026,8.04366335239394,4.9416424226093,7.68109900153636,8.79254961417738,6.13772705408623,9.20341645690336,4.99043258677874,4.86753445045558,4.70048036579242,5.09375020080676,4.34380542185368,6.24222326545517,5.4249500174814,9.42971683672115,4.34380542185368,7.25417784645652,8.9219914105367,6.1463292576689,6.01615715969835,5.95842469302978,4.81218435537242,9.24551444698384,5.4249500174814,7.55328660560042,5.11799381241676,6.18001665365257,10.1127349986582,4.96284463025991,10.5333753934569,10.0868086096632,7.4770384723197,5.13579843705026,9.99323684467974,10.1938784527988,9.62516157500837,8.2393294279018,6.27852142416584,10.5139050854971,6.49828214947643,5.59842195899838,10.6423726247699,8.50248556254396,6.45519856334012,7.7376162828579,8.38022733634308,5.4380793089232,7.33693691370762,5.26269018890489,7.80098207125774,5.24702407216049,7.48661331313996,5.37989735354046,7.18462915271731,5.62401750618734,7.51806418123308,5.07517381523383,7.37963215260955,5.53338948872752,7.41878088275079,4.63472898822964,7.20563517641036,5.19295685089021,7.9373746961633,5.31320597904179,7.47873
482556787,5.29831736654804,7.44190672805162,5.94017125272043,8.00703401219341,5.74620319054015,7.83439230291044,5.6937321388027,7.08422642209792,5.84932477994686,6.68085467879022,5.57215403217776,7.25205395185281,4.89034912822175,8.41294317004244,5.13579843705026,7.95226330865705,5.62401750618734,7.7397944584087,5.05624580534831,7.0475172213573,4.89034912822175,7.50714107972761,5.4249500174814,8.1341742721379,5.74620319054015,9.1719113453564,7.44600149832412,5.46383180502561,6.42971947803914,4.0943445622221,5.13579843705026,4.34380542185368,5.76519110278484,6.4723462945009,5.09375020080676,5.54907608489522,8.48156601377309,5.48063892334199,7.18765716411496,5.13579843705026,8.10198073185319,4.63472898822964,7.43955930913332,6.15909538849193,6.83947643822884,5.28320372873799,7.02375895473844,5.56068163101553,7.80669637252118,8.22603842948548,4.49980967033027,6.54678541076052,4.14313472639153,6.99117688712121,5.88887795833288,5.68017260901707,6.81673588059497,8.17948018535889,5.28320372873799,7.55851674304564,4.76217393479776,6.9037472575846,5.52146091786225,6.60394382460047,6.06378520868761,7.42595365707754,5.31320597904179,8.19118600464279,5.49306144334055,7.78572089653462,5.21493575760899,7.51914995766982,5.4249500174814,8.15392513200786,5.90536184805457,8.63710728808157,6.77650699237218,7.46737106691756,6.98841318199959,9.43132158712571,6.92461239604856,7.18235211188526,7.00397413672268,7.43307534889858,5.56068163101553,7.43661726523423,5.56068163101553,7.32580750259577,6.12468339089421,7.9359451033537,5.97635090929793,7.19443685110033,5.87493073085203,7.9035962896143,5.57215403217776,8.15219801586179,5.33271879326537,8.68558484267669,6.08677472691231,5.78996017089725,4.46590811865458,7.94979721616185,9.92544471988817,7.00033446027523,5.52146091786225,7.4713630881871,6.28599809450886,6.89365635460264,9.76180877420419,5.94803498918065,7.67461749736436,4.78749174278205,7.43425738213314,5.11799381241676,7.84932381804056,5.24702407216049,8.41249912030157,5.318119993844
22,7.16472037877186,4.0943445622221,7.56008046502183,4.9416424226093,7.01121398735037,4.99043258677874,7.72001794043224,5.11799381241676,7.18916773842032,4.60517018598809,8.32869258354557,5.20948615284142,8.12622252945853,9.82514756108488,8.89329814421792,6.60258789218934,7.48829351515943,6.4723462945009,9.61587214452889,9.44470077849556,5.29831736654804,7.93630269320196,4.49980967033027,6.19236248947487,5.20948615284142,5.77765232322266,6.3456363608286,4.4188406077966,5.99146454710798,6.01615715969835,4.49980967033027,6.75343791859778,6.5792512120101,10.6197895785626,10.4498154461446,9.20381915498592,7.0335064842877,7.5963923040642,10.412922209356,10.5143937777122,6.83410873881384,7.74109909003537,6.93731408122368,10.6229601582636,10.8704143628788,8.60831278478372,6.25958146406492,6.76388490856244,7.07580886397839,4.53259949315326,10.4028375828271,4.24849524204936,9.41417877223239,10.7408432963221,7.28824440102012,5.77765232322266,4.78749174278205,4.78749174278205,4.29045944114839,5.75890177387728,7.67647364638916,7.07157336421153,7.34536484041687,6.24027584517077,7.03966034986208,4.78749174278205,6.97166860472579,6.5792512120101,6.12249280951439,4.70048036579242,7.6246189861594,7.11395610956603,9.64329093701921,5.46383180502561,5.34710753071747,4.20469261939097,6.4831073514572,8.51338595307328,8.57035473953047,7.43543801981455,6.82437367004309,7.73061406606374,4.91998092582813,6.82001636467413,8.53699581871242,6.31716468674728,6.18826412308259,6.61204103483309,7.38832785957711,6.62007320653036,7.24850407237061,8.45404037641097,7.54486106865846,7.96935774201635,6.20859002609663,5.91350300563827,8.41249912030157,6.35262939631957,10.1590595710697,9.97422556704147,8.56025268087669,7.36960072052641,10.8142027700771,7.80751004221619,4.9416424226093,10.4673229966157,7.97693875695943,5.95064255258773,10.3896720623857,9.0280988119824,7.7066129139642,5.8664680569333,5.33271879326537,6.75460409948796,8.18507147753228,8.33158624363075,6.0137151560428,7.28824440102012,5.030437
92139244,7.68799716639302,5.71373280550937,5.92157841964382,5.36129216570943,6.55677835615804,7.84619881549743,8.2498364854257,4.76217393479776,8.21229713822977,11.0778410027873,10.429014579155,9.73566044189263,5.90536184805457,7.44833386089748,9.42278691374206,10.3688240576817,10.8003711788851,7.36770857237437,5.48063892334199,8.65921345143667,8.90422273736872,10.4384007278312,10.6277457281211,6.56526497003536,5.05624580534831,5.17614973257383,6.88448665204278,10.8999932849329,5.54907608489522,6.92951677076365,11.0145882026566,7.20191631753163,6.68085467879022,9.30873669383238,4.29045944114839,10.2155207158517,10.29725113067,8.45786772533142,7.11476944836646,8.99355158629998,8.53895468319775,10.5053414746497,5.07517381523383,7.38398945797851,10.2066984266055,6.00635315960173,9.27799902045,8.00636756765025,6.83195356556585,9.53169897078735,6.72623340235875,8.20303024171486,4.84418708645859,7.59739632021279,8.15622332319462,8.5855992240803,5.63478960316925,9.55930567284486,6.2803958389602,8.38434727808281,5.09375020080676,8.70533113353163,10.387548167782,7.83991936001258,5.33271879326537,10.1849000119743,10.2676791753285,8.85537824604113,6.4085287910595,5.07517381523383,4.24849524204936,9.27612811251419,6.49677499018586,10.4366713589128,9.44018144473811,10.1720632552482,6.74170069465205,6.70563909486,10.7204219298701,10.3699850424526,6.20050917404269,5.95842469302978,6.42486902390539,5.74939298590825,6.80239476332431,9.80835235742109,9.84373754813035,9.89998141320684,8.02845516411425,6.48768401848461,6.70073110954781,9.77354969542174,10.2166546064979,10.4064120444059,10.0410732154887,6.96885037834195,8.77663009842772,9.46869661504613,5.20948615284142,6.2803958389602,6.18001665365257,8.86502918668777,6.78784498230958,10.9621108329879,11.085720754841,8.6522484224091,6.7093043402583,9.24763615671988,10.1761448157198,4.72738781871234,5.58724865840025,10.4317600899058,7.23993259132047,8.61341204915678,6.10255859461357,5.77765232322266,6.34035930372775,4.4188406077966,5.91
3259949315326,6.3919171133926,10.5859781529075,10.8018790445225,6.59850902861452,7.11395610956603,7.60439634879634,6.96508034560141,6.05443934626937,8.73600738456922,10.1302644950828,9.75115189123243,8.15651022607997,6.96602418710611,5.85793315448346,6.8351845861473,6.7900972355139,6.67959918584438,10.9557766315986,5.59842195899838,5.92425579741453,5.93224518744801,10.8786507839212,10.8681302573499,6.75576892198425,9.33617975681533,7.98344006300654,6.74523634948436,4.60517018598809,6.49677499018586,7.35244110024358,7.62119516280984,7.56579328242851,7.7553388128465,6.25382881157547,7.75790620835175,5.99396142730657,5.46806014113513,7.35179986905778,7.27239839257005,5.7037824746562,11.2156239402523,6.73459165997295,6.10255859461357,11.0364851647323,9.52281261323518,7.92443418488756,7.38647084882989,9.25751017645257,7.71154897962915,6.19440539110467,5.34710753071747,5.98141421125448,7.59438124255182,11.0232903937753,5.94017125272043,7.64587582518481,6.70808408385307,6.11809719804135,11.1387555148428,7.32118855673948,6.53087762772588,9.55265266507908,5.88610403145016,10.8208577016298,11.3086404680046,4.9416424226093,8.54985397365579,9.45250192912615,5.15905529921453,6.41999492814714,11.0052615045418,10.8644265494124,9.92063961135369,7.9585769038139,6.28785856016178,10.5196456915679,7.86365126544865,6.09356977004514,9.50703173858555,7.50878717063428,10.4750601435752,8.48776438072542,7.2283884515736,5.92157841964382,6.20050917404269,6.91473089271856,10.3833486865446,10.5180243325142,6.33505425149806,8.5387589693308,7.82364593083495,5.07517381523383,9.05497228474248,10.8712315659992,10.1117610911836,6.02586597382531,5.95583736946483,6.68461172766793,10.8580562456203,4.67282883446191,9.40112600718227,9.61640530015631,7.22766249872865,5.90536184805457,6.91869521902047,10.0862256892153,7.2152399787301,5.20948615284142,10.6290293165319,7.64060382639363,7.743269700829,11.0134683079414,8.46337038471873,8.05006542291597,8.2398574110186,4.29045944114839,7.54274354536855,10.6436375
866914,9.32322252903286,8.00803284696931,10.8057807747664,8.85723049420195,5.11799381241676,7.71556953452021,6.18826412308259,10.4028072361159,10.1204922998909,7.27931883541462,9.12467354521909,8.43533216493592,7.23993259132047,10.650459819791,9.41368929596221,9.81602152675485,7.00033446027523,10.1018054824147,7.67832635650689,8.84202652949881,6.46146817635372,6.79570577517351,6.83195356556585,6.95081476844258,10.8248454247433,5.07517381523383,6.39526159811545,6.37331978957701,6.34035930372775,10.6625395079838,7.6657534318617,4.57471097850338,5.29831736654804,7.1276936993474,10.9958134565615,10.6355669430979,8.46104603079324,5.71702770140622,5.4380793089232,6.17378610390194,7.07665381544395,6.3919171133926,6.97260625130175,9.31892568238962,4.24849524204936,9.49009107410168,4.57471097850338,7.46565531013406,4.96284463025991,7.86901937649902,4.38202663467388,8.3786205415523,5.39362754635236,4.4188406077966,5.28320372873799,4.29045944114839,4.38202663467388,6.02344759296103,5.4249500174814,8.43879912398823,6.67708346124714,5.48063892334199,4.78749174278205,6.3818160174061,5.29831736654804,7.13886699994552,5.29831736654804,5.52146091786225,6.10924758276437,6.16541785423142,4.4188406077966,5.90536184805457,5.23110861685459,6.21460809842219,4.91998092582813,8.20930841164694,4.24849524204936,6.20657592672493,8.43793351043061,4.76217393479776,6.72142570079064,5.80814248998044,7.04577657687951,5.66988092298052,4.49980967033027,5.50938833662798,4.81218435537242,5.19295685089021,4.99043258677874,5.09375020080676,4.4188406077966,7.00850518208228,5.40717177146012,7.29573507274928,6.1463292576689,5.99146454710798,4.63472898822964,4.46590811865458,5.62401750618734,6.69208374250663,6.68336094576627,5.63478960316925,6.80682936039218,5.75890177387728,6.23441072571837,5.75574221358691,6.38519439899773,6.17378610390194,5.03043792139244,6.88346258641309,7.0604763659998,5.72358510195238,6.79122146272619,6.58479139238572,4.60517018598809,4.49980967033027,6.71659477352098,6.9037472575846,5
.03043792139244,5.93224518744801,6.85224256905188,6.93147180559945,5.63478960316925,6.7990558620588,7.28619171470238,6.24222326545517,6.88448665204278,6.97166860472579,6.06378520868761,5.53338948872752,6.82871207164168,5.17614973257383,6.57088296233958,5.20948615284142,6.15909538849193,6.2803958389602,6.81783057145415,4.9416424226093,4.46590811865458,6.3578422665081,4.49980967033027,5.4380793089232,4.49980967033027,5.01063529409626,5.19295685089021,6.39692965521615,5.54907608489522,5.53338948872752,5.29831736654804,5.19295685089021,6.12249280951439,5.05624580534831,4.34380542185368,5.4249500174814,4.76217393479776,6.13988455222626,5.01063529409626,5.15329159449778,7.89506349809157,4.24849524204936,5.33271879326537,4.76217393479776,4.91998092582813,5.23110861685459,5.94017125272043,5.26269018890489,5.19295685089021,6.47697236288968,6.93439720992856,6.00881318544259]],"container":"<table class=\"display\">\n <thead>\n <tr>\n <th> <\/th>\n <th>Partic<\/th>\n <th>Compar<\/th>\n <th>AOI_Type<\/th>\n <th>Dur_Seconds<\/th>\n <th>Dur_Log<\/th>\n <\/tr>\n <\/thead>\n<\/table>","options":{"pageLength":8,"columnDefs":[{"targets":5,"render":"function(data, type, row, meta) {\n return type !== 'display' ? data : DTWidget.formatRound(data, 3, 3, \",\", \".\");\n }"},{"targets":1,"render":"function(data, type, row, meta) {\nreturn type === 'display' && data.length > 5 ?\n'<span title=\"' + data + '\">' + data.substr(0, 6) + '...<\/span>' : data;\n}"},{"className":"dt-right","targets":[1,4,5]},{"orderable":false,"targets":0}],"order":[],"autoWidth":false,"orderClasses":false,"lengthMenu":[8,10,25,50,100]},"callback":"function(table) {\ntable.page(1).draw(false);\n}"},"evals":["options.columnDefs.0.render","options.columnDefs.1.render","callback"],"jsHooks":[]}</script> --- class: inverse-green, center, middle name: add_complexity ## 6. 
Let's slightly increase complexity .footnote[[<i> get me back to the topic slide</i>](#topicslide)] --- ## Question 1 .UA-red[<i><b> How much time do people spend in a text (in total) when comparing two texts? </i></b>] A simple model could look like: $$ log(Time_i) \sim N(\mu,\sigma_e) $$ with `\(Time_i\)` being the total time for each combination of participant, comparison and AOI_type .small[ ```r Durations_CJ %>% group_by(Partic, Compar, AOI_Type) %>% summarize( Dur_Seconds = mean(Dur_Seconds), Dur_Log = mean(Dur_Log) ) %>% head(5) ``` ``` ## # A tibble: 5 x 5 ## # Groups: Partic, Compar [3] ## Partic Compar AOI_Type Dur_Seconds Dur_Log ## <dbl> <chr> <chr> <dbl> <dbl> ## 1 1 DHH 214-425 AOI_LEFT_TEXT 7.12 6.61 ## 2 1 DHH 214-425 AOI_RIGHT_TEXT 15.3 8.55 ## 3 1 DLL 508- 51 AOI_LEFT_TEXT 4.61 7.31 ## 4 1 DLL 508- 51 AOI_RIGHT_TEXT 3.28 6.63 ## 5 1 DMH 414-609 AOI_LEFT_TEXT 1.93 6.82 ``` ] --- ## Question 1 .UA-red[<i><b> How much time do people spend in a text (in total) when comparing two texts? 
</i></b>] A simple model could look like: $$ log(Time_i) \sim N(\mu,\sigma_e) $$ ---- .UA-red[<b> .left-column[.Super[🏃] ] .right-column[ - Try it yourself and run the model - Inspect convergence - Interpret the results </b> ] ] --- ## Question 1 First we make a dataset ```r Data_CJ1 <- Durations_CJ %>% group_by(Partic, Compar, AOI_Type) %>% summarize( Dur_Seconds = mean(Dur_Seconds), Dur_Log = mean(Dur_Log) ) ``` Then we can run the model ```r Model1_CJ <- brm( Dur_Log ~ 1, data = Data_CJ1, family = "gaussian", backend = "cmdstanr", seed = 1975 ) ``` --- ## Question 1 ```r Model1_CJ <- readRDS(here("models", "Model1_CJ.RDS")) summary(Model1_CJ) ``` ``` ## Family: gaussian ## Links: mu = identity; sigma = identity ## Formula: Dur_Log ~ 1 ## Data: Data_CJ1 (Number of observations: 499) ## Samples: 4 chains, each with iter = 2000; warmup = 1000; thin = 1; ## total post-warmup samples = 4000 ## ## Population-Level Effects: ## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS ## Intercept 7.65 0.06 7.54 7.76 1.00 3411 2340 ## ## Family Specific Parameters: ## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS ## sigma 1.23 0.04 1.15 1.31 1.00 3446 2663 ## ## Samples were drawn using sample(hmc). For each parameter, Bulk_ESS ## and Tail_ESS are effective sample size measures, and Rhat is the potential ## scale reduction factor on split chains (at convergence, Rhat = 1). ``` ??? Convergence is clearly ok! 
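A minimal sketch of what the `Rhat` column in the summary measures, assuming simulated stand-in draws instead of the real `Model1_CJ` chains. The objects `draws` and `rhat_basic` are hypothetical names, and this is the simplified (non-split) version of the statistic, not the exact computation behind the printed output.

```r
# Stand-in for post-warmup draws of the intercept: 4 chains x 1000 iterations.
# The mean and sd are borrowed from the summary above purely for illustration.
set.seed(1975)
n_chains <- 4
n_iter   <- 1000
draws <- matrix(rnorm(n_chains * n_iter, mean = 7.65, sd = 0.06),
                nrow = n_iter, ncol = n_chains)

chain_means <- colMeans(draws)        # one mean per chain
chain_vars  <- apply(draws, 2, var)   # one variance per chain

W <- mean(chain_vars)                 # average within-chain variance
B <- n_iter * var(chain_means)        # between-chain variance

# Pooled variance estimate and the resulting scale reduction factor
var_plus   <- (n_iter - 1) / n_iter * W + B / n_iter
rhat_basic <- sqrt(var_plus / W)
rhat_basic
```

When all chains explore the same distribution, the between-chain variance adds almost nothing to the pooled estimate, so the ratio stays close to 1. Chains stuck in different regions would inflate `B` and push the value above 1.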
--- ## Question 1 ```r plot(Model1_CJ) ``` <img src="Part1_files/figure-html/unnamed-chunk-53-1.png" width="432" /> --- ## Further exploration of the posterior Pull the 4000 samples of our parameter values from the posterior with .UA-red[`posterior_samples()`] ```r posterior_samples(Model1_CJ) %>% head(10) ``` ``` ## b_Intercept sigma Intercept lp__ ## 1 7.64887 1.15629 7.64887 -812.378 ## 2 7.63948 1.21292 7.63948 -810.850 ## 3 7.67498 1.22842 7.67498 -810.910 ## 4 7.59150 1.23610 7.59150 -811.445 ## 5 7.68766 1.24512 7.68766 -811.191 ## 6 7.59683 1.22045 7.59683 -811.293 ## 7 7.69482 1.24441 7.69482 -811.274 ## 8 7.61122 1.19426 7.61122 -811.343 ## 9 7.62011 1.16751 7.62011 -812.042 ## 10 7.61525 1.17405 7.61525 -811.850 ``` .footnote[*with the `head()` function we ask to only show the first 10 rows] --- ## Time to unpack the plotting power of `ggplot2` .center2[ First we set a custom theme that makes our plots look 'better' ```r theme_set(theme_linedraw() + theme(text = element_text(family = "Times"), panel.grid = element_blank())) ``` ] --- ## Time to unpack the plotting power of `ggplot2` .pull-left[ Package .UA-red[`tidybayes`] is a wrapper including functions from .UA-red[`ggdist`] that open a lot of plotting options .footnotesize[ ```r posterior_samples(Model1_CJ) %>% # Get draws of the posterior select(b_Intercept) %>% # Only select our Intercept pivot_longer(everything()) %>% # Rearrange the result to plot ggplot(aes(x = value, y = name)) + # Let's plot... * stat_pointinterval(.width = .89) + # 89% CI xlab("marginal posterior") # Set our x-axis label ``` ] ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-57-1.png" width="504" /> ] --- ## Your turn .center2[ .UA-red[<b> .left-column[.Super[🏃] ] - Make a similar plot for the estimate of the standard deviation - With a CI of 80% - Interpret the results </b> ] ] --- ## Solution... 
.pull-left[ .footnotesize[ ```r posterior_samples(Model1_CJ) %>% # Get draws of the posterior * select(sigma) %>% # Only select sigma pivot_longer(everything()) %>% # Rearrange the result to plot ggplot(aes(x = value, y = name)) + # Let's plot... * stat_pointinterval(.width = .80) + # 80% CI xlab("marginal posterior") # Set our x-axis label ``` ] ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-59-1.png" width="504" /> ] --- ## Another type of plot with some more information in it Let's use .UA-red[`stat_interval()`] from the `ggdist` package .pull-left[ .scriptsize[ ```r posterior_samples(Model1_CJ) %>% select(b_Intercept) %>% ggplot(aes(x = b_Intercept, y = 0)) + * stat_interval( * .width = c(.95,.90,.50) # The CI's to plot * ) + xlab("marginal posterior") + scale_y_continuous( # No y-axis information NULL, breaks = NULL ) + scale_color_brewer() ``` ] ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-61-1.png" width="432" /> ] --- ## Your turn .center2[ .UA-red[<b> .left-column[.Super[🏃] ] - Make a similar plot for the estimate of the standard deviation - With CI's of 50%, 80%, 89% and 95% </b> ] ] --- ## Solution .pull-left[ .scriptsize[ ```r posterior_samples(Model1_CJ) %>% select(sigma) %>% ggplot(aes(x = sigma, y = 0)) + * stat_interval( * .width = c(.95, .89,.80,.50) # The CI's to plot * ) + xlab("marginal posterior") + scale_y_continuous( NULL, breaks = NULL ) + scale_color_brewer() ``` ] ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-63-1.png" width="432" /> ] --- ## We can also integrate plots for mu and sigma And we introduce the .UA-red[`stat_halfeye()`] function of `ggdist` .pull-left[ .footnotesize[ ```r names <- c("mu","sigma") posterior_samples(Model1_CJ) %>% select(b_Intercept,sigma) %>% set_names(names) %>% pivot_longer(everything()) %>% ggplot(aes(x = value, y = 0)) + * stat_halfeye(.width = .89, normalize = "panels") + scale_y_continuous(NULL, breaks = NULL) + xlab("marginal posterior") + facet_wrap(~name, 
scales = "free") ``` ] ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-65-1.png" width="504" /> ] --- ## Your turn .center2[ .UA-red[<b> .left-column[.Super[🏃] ] - Try to replicate the graph - But with other CI's (e.g. 50%, 90% and 95%) </b> ] ] --- ## Solution .pull-left[ .footnotesize[ ```r names <- c("mu","sigma") posterior_samples(Model1_CJ) %>% select(b_Intercept,sigma) %>% set_names(names) %>% pivot_longer(everything()) %>% ggplot(aes(x = value, y = 0)) + * stat_halfeye( * .width = c(.50, .90, .95), normalize = "panels") + scale_y_continuous(NULL, breaks = NULL) + xlab("marginal posterior") + facet_wrap(~name, scales = "free") ``` ] ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-67-1.png" width="504" /> ] --- ## How to get rid of the log? We can simply do calculations with our posterior samples! So, we can just use `exp()`: .pull-left[ .footnotesize[ ```r names <- c("mu_log_scale","mu_sec", "sigma") posterior_samples(Model1_CJ) %>% select(b_Intercept,sigma) %>% * mutate( * mu_sec = exp(b_Intercept) * ) %>% # we do the select() again to reorder the columns # this is not strictly necessary, but then watch out # when setting the names of the columns! * select(b_Intercept, mu_sec, sigma) %>% set_names(names) %>% pivot_longer(everything()) %>% ggplot(aes(x = value, y = 0)) + stat_halfeye(.width = c(.5, .9, .95), normalize = "panels") + scale_y_continuous(NULL, breaks = NULL) + xlab("marginal posterior") + facet_wrap(~name, scales = "free") ``` ] ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-69-1.png" width="504" /> ] --- ## Question 2 .UA-red[<i><b> Does the time that people spend reading a text differ between the two texts if we control for the length of the text? 
</i></b>] A model could look like: `$$\begin{aligned} & log(Time_{i}) \sim N(\mu,\sigma_{e_{i}})\\ & \mu = \beta_0 + \beta_1*\text{Right_Text}_{i} + \beta_2*\text{Text_Length}_{i} \\ \end{aligned}$$` ```r load(here("data", "Data_CJ2.Rdata")) head(Data_CJ2, 6) ``` ``` ## # A tibble: 6 x 7 ## # Groups: Partic, Compar [3] ## Partic Compar AOI_Type Dur_Seconds Dur_Log Right_Text Text_Length ## <dbl> <chr> <chr> <dbl> <dbl> <dbl> <dbl> ## 1 1 DHH 214-425 AOI_LEFT_TEXT 7.12 6.61 0 2.72 ## 2 1 DHH 214-425 AOI_RIGHT_TEXT 15.3 8.55 1 2.48 ## 3 1 DLL 508- 51 AOI_LEFT_TEXT 4.61 7.31 0 3.11 ## 4 1 DLL 508- 51 AOI_RIGHT_TEXT 3.28 6.63 1 3.13 ## 5 1 DMH 414-609 AOI_LEFT_TEXT 1.93 6.82 0 1.78 ## 6 1 DMH 414-609 AOI_RIGHT_TEXT 1.53 6.33 1 3.89 ``` --- ## Your turn .center2[ .UA-red[<b> .left-column[.Super[🏃] ] - Try to run the model with `brms` - Check model convergence - Make one plot to visualise the posteriors for the regression parameters </b> ] ] --- ## Solution ```r Model2_CJ <- brm( Dur_Log ~ 1 + Text_Length + Right_Text, data = Data_CJ2, family = "gaussian", backend = "cmdstanr", seed = 1975 ) ``` --- ## Solution Visual checking of convergence .pull-left[ ```r plot(Model2_CJ) ``` ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-74-1.png" width="432" /> ] --- ## Solution: check output .small[ ```r summary(Model2_CJ) ``` ``` ## Family: gaussian ## Links: mu = identity; sigma = identity ## Formula: Dur_Log ~ 1 + Text_Length + Right_Text ## Data: Data_CJ2 (Number of observations: 499) ## Samples: 4 chains, each with iter = 2000; warmup = 1000; thin = 1; ## total post-warmup samples = 4000 ## ## Population-Level Effects: ## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS ## Intercept 6.34 0.16 6.02 6.66 1.00 4980 3109 ## Text_Length 0.50 0.05 0.40 0.59 1.00 4968 3327 ## Right_Text -0.33 0.10 -0.52 -0.14 1.00 4424 2890 ## ## Family Specific Parameters: ## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS ## sigma 1.11 0.04 1.04 1.18 1.00 4759 
3210 ## ## Samples were drawn using sample(hmc). For each parameter, Bulk_ESS ## and Tail_ESS are effective sample size measures, and Rhat is the potential ## scale reduction factor on split chains (at convergence, Rhat = 1). ``` ] --- ## Solution: create plot on posteriors for beta's .pull-left[ ```r posterior_samples(Model2_CJ) %>% select( b_Intercept, b_Text_Length, b_Right_Text ) %>% pivot_longer(everything()) %>% ggplot(aes(x = value, y = 0)) + stat_halfeye( .width = c(.5, .9, .95), normalize = "panels") + scale_y_continuous(NULL, breaks = NULL) + xlab("marginal posterior") + facet_wrap(~name, scales = "free") ``` ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-77-1.png" width="432" /> ] --- ## Let's introduce `mcmc_areas()` from the `bayesplot` .pull-left[ ```r mcmc_areas( Model2_CJ, pars = c("b_Text_Length", "b_Right_Text" ), prob = 0.89 ) ``` ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-79-1.png" width="432" /> ] --- ## or `mcmc_areas_ridges` from `bayesplot` .pull-left[ ```r mcmc_areas_ridges( Model2_CJ, pars = c("b_Text_Length", "b_Right_Text" ), prob = 0.89 ) ``` ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-81-1.png" width="432" /> ] --- ## or `mcmc_intervals` from `bayesplot` & let's play around with the `color_scheme_set()` that works with all `bayesplot` functions... .pull-left[ ```r color_scheme_set("red") mcmc_intervals( Model2_CJ, pars = c("b_Text_Length", "b_Right_Text"), prob = c(0.5, 0.89, 0.95) ) ``` ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-83-1.png" width="432" /> ] --- ## CI's (ETI's) vs. HDI's <img src="Part1_files/figure-html/unnamed-chunk-84-1.png" width="504" /> --- ## Alternative for p-values? --- ## Alternative for p-values? 
.pull-left[ <img src="Kruchke_2018.jpg" width="99%" height="99%" /> ] .pull-right[ <b>.UA-red[ROPE] </b>: Region Of Practical Equivalence .SW-greenD[ <i> > Set a region of parameter values that can be considered equivalent to having no effect </i> ] - for standardized effect sizes the advised default is a range of -0.1 to 0.1 - this equals half of a small effect size (Cohen, 1988) - all parameter values in that range are treated as equivalent to no effect ] --- ## Alternative for p-values? .pull-left[ <img src="Kruchke_2018.jpg" width="99%" height="99%" /> ] .pull-right[ <b>.UA-red[ROPE + HDI rule] </b> - 95% HDI entirely outside the ROPE `\(\rightarrow\)` `\(H_0\)` rejected - 95% HDI entirely inside the ROPE `\(\rightarrow\)` `\(H_0\)` accepted - 95% HDI partially overlapping the ROPE `\(\rightarrow\)` undecided ] --- ## HDI + ROPE with `brms` results We can use the `equivalence_test()` function of the `bayestestR` package ```r equivalence_test(Model2_CJ) ``` ``` ## # Test for Practical Equivalence ## ## ROPE: [-0.12 0.12] ## ## Parameter | H0 | inside ROPE | 95% HDI ## ---------------------------------------------------- ## Intercept | Rejected | 0.00 % | [ 5.99 6.63] ## Text_Length | Rejected | 0.00 % | [ 0.41 0.59] ## Right_Text | Rejected | 0.00 % | [-0.52 -0.14] ``` --- ## Or the `parameters` package This package has the nice flexible `model_parameters()` function! 
.footnotesize[ ```r posterior_samples(Model2_CJ) %>% model_parameters(rope_range=c(-0.12,0.12)) ``` ``` ## # Fixed Effects ## ## Parameter | Median | 89% CI | pd | % in ROPE ## ----------------------------------------------------------------- ## b_Intercept | 6.34 | [ 6.07, 6.59] | 100% | 0% ## b_Text_Length | 0.50 | [ 0.42, 0.57] | 100% | 0% ## b_Right_Text | -0.34 | [ -0.49, -0.17] | 99.95% | 1.43% ## sigma | 1.11 | [ 1.05, 1.16] | 100% | 0% ## Intercept | 7.65 | [ 7.57, 7.73] | 100% | 0% ## lp__ | -761.62 | [-763.69, -760.08] | 100% | 0% ``` ] `pd` stands for <i>.SW-greenD[probability of direction]</i>: the share of the posterior that has the same sign as the median --- ## Now it's getting more fun We can combine `model_parameters()` with `plot()` because the `see` package deals with the output! .pull-left[ ```r posterior_samples(Model2_CJ) %>% select( b_Right_Text, b_Text_Length ) %>% model_parameters( ) %>% plot(show_labels = TRUE, size_text = 3) ``` ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-90-1.png" width="432" /> ] --- ## Now it's getting more fun And another one ... We can combine `equivalence_test()` with `plot()` as well, thanks to the `see` package! .pull-left[ ```r equivalence_test(Model2_CJ) %>% plot( ) ``` ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-92-1.png" width="432" /> ] --- <i>"Hey! You promised mixed effects models"</i> ## Question 3 .UA-red[<i><b> Does the time that people spend reading a text differ between the two texts if we control for the length of the text? </i></b>] In the previous analyses we neglected that we have multiple observations per person! 
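A minimal sketch of why repeated observations per person matter, using simulated data rather than the workshop data. The numbers of participants and observations, the two standard deviations and the overall mean of 7 are all assumptions chosen for illustration, and `icc_theory` and `icc_est` are hypothetical names.

```r
# Hypothetical set-up: 26 participants with 20 observations each.
set.seed(1975)
n_partic <- 26
n_obs    <- 20
sd_u     <- 0.6   # assumed sd of the participant-specific shifts
sd_e     <- 1.0   # assumed residual sd

partic <- rep(seq_len(n_partic), each = n_obs)
u      <- rnorm(n_partic, mean = 0, sd = sd_u)   # one shift per participant
y      <- 7 + u[partic] + rnorm(n_partic * n_obs, mean = 0, sd = sd_e)

# Intraclass correlation implied by the assumed sds:
# the share of the total variance that sits between participants
icc_theory <- sd_u^2 / (sd_u^2 + sd_e^2)

# Rough moment-based estimate from the simulated data
within_var  <- mean(tapply(y, partic, var))
between_var <- var(tapply(y, partic, mean)) - within_var / n_obs
icc_est     <- between_var / (between_var + within_var)

c(theory = icc_theory, estimate = icc_est)
```

A clearly positive intraclass correlation means that observations from the same participant resemble each other, so treating them as independent overstates the amount of information in the data.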
A mixed effects model could look like: `$$\begin{aligned} & log(Time_{ij}) \sim N(\mu,\sigma_{e_{ij}})\\ & \mu = \beta_0 + \beta_1*\text{Right_Text}_{ij} + \beta_2*\text{Text_Length}_{ij} + \mu_j\\ & \mu_j \sim N(0,\sigma_{u_j}) \end{aligned}$$` --- ## Adding a random term to the model ```r Model3_CJ <- brm( * Dur_Log ~ Text_Length + Right_Text + (1|Partic), data = Data_CJ2, family = "gaussian", backend = "cmdstanr", seed = 1975 ) ``` ---- .UA-red[<b> .left-column[.Super[🏃] ] - Estimate the model - Check the output - Interpret the results </b> ] --- ## Solution .footnotesize[ ```r Model3_CJ <- readRDS(here("models", "Model3_CJ.RDS")) summary(Model3_CJ) ``` ``` ## Family: gaussian ## Links: mu = identity; sigma = identity ## Formula: Dur_Log ~ 1 + Text_Length + Right_Text + (1 | Partic) ## Data: Data_CJ2 (Number of observations: 499) ## Samples: 4 chains, each with iter = 2000; warmup = 1000; thin = 1; ## total post-warmup samples = 4000 ## *## Group-Level Effects: *## ~Partic (Number of levels: 26) *## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS *## sd(Intercept) 0.62 0.11 0.45 0.87 1.00 1014 1218 ## ## Population-Level Effects: ## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS ## Intercept 6.63 0.19 6.25 7.01 1.00 1543 2156 ## Text_Length 0.39 0.04 0.31 0.48 1.00 6940 2934 ## Right_Text -0.35 0.08 -0.52 -0.19 1.00 6448 2957 ## ## Family Specific Parameters: ## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS ## sigma 0.95 0.03 0.89 1.01 1.00 5550 2644 ## ## Samples were drawn using sample(hmc). For each parameter, Bulk_ESS ## and Tail_ESS are effective sample size measures, and Rhat is the potential ## scale reduction factor on split chains (at convergence, Rhat = 1). ``` ] --- ## What do we get? If we want to plot information on the variances (or sd) we have to know the names of the elements .footnotesize[ ```r posterior_samples(Model3_CJ) %>% str() ``` ``` ## 'data.frame': 4000 obs. 
of 59 variables: ## $ b_Intercept : num 6.63 6.79 6.66 6.74 6.57 ... ## $ b_Text_Length : num 0.419 0.396 0.404 0.347 0.373 ... ## $ b_Right_Text : num -0.309 -0.403 -0.288 -0.258 -0.286 ... *## $ sd_Partic__Intercept : num 0.505 0.531 0.537 0.637 0.589 ... ## $ sigma : num 0.928 0.937 0.939 0.936 0.874 ... ## $ Intercept : num 7.73 7.78 7.72 7.65 7.54 ... *## $ r_Partic[1,Intercept] : num -0.141 -0.673 -0.112 0.07 -0.57 ... *## $ r_Partic[2,Intercept] : num 0.2899 -0.0303 0.5392 0.2669 0.5086 ... *## $ r_Partic[3,Intercept] : num 0.619 0.767 0.996 1.152 0.998 ... *## $ r_Partic[4,Intercept] : num -0.3145 0.0216 -0.3654 0.1749 0.0874 ... *## $ r_Partic[5,Intercept] : num -0.3268 -0.0846 0.1697 -0.0618 -0.1935 ... *## $ r_Partic[6,Intercept] : num 0.492 0.142 0.256 0.332 0.393 ... *## $ r_Partic[7,Intercept] : num -0.617 -0.777 -0.576 -0.444 -0.248 ... *## $ r_Partic[8,Intercept] : num 0.1582 -0.1739 -0.0464 0.0666 0.1937 ... *## $ r_Partic[9,Intercept] : num 0.1173 -0.4262 -0.2635 -0.4233 0.0362 ... *## $ r_Partic[10,Intercept]: num -0.0433 0.2303 -0.0641 0.3779 0.061 ... *## $ r_Partic[11,Intercept]: num 0.0683 0.4425 0.1351 0.0419 0.4656 ... *## $ r_Partic[12,Intercept]: num -0.0778 0.5016 0.1428 0.1423 0.1359 ... *## $ r_Partic[13,Intercept]: num 0.533 0.211 0.403 0.8 0.465 ... *## $ r_Partic[14,Intercept]: num -0.0189 0.7486 0.2406 0.8047 0.8817 ... *## $ r_Partic[15,Intercept]: num 0.552 0.526 0.492 0.843 0.495 ... *## $ r_Partic[16,Intercept]: num -1.06 -1.61 -1.61 -1.53 -1.62 ... *## $ r_Partic[17,Intercept]: num 0.293 -0.11 -0.366 0.151 0.181 ... *## $ r_Partic[18,Intercept]: num 0.358 0.562 0.7 0.415 0.357 ... *## $ r_Partic[19,Intercept]: num -0.898 -0.246 -0.3 -0.456 -0.226 ... *## $ r_Partic[20,Intercept]: num -0.892 -0.2 -0.697 -0.315 -0.5 ... *## $ r_Partic[21,Intercept]: num 0.3688 -0.1558 0.099 0.6076 0.0982 ... ## $ r_Partic[22,Intercept]: num 0.2282 -0.4344 -0.1846 -0.0366 -0.0649 ... 
## $ r_Partic[23,Intercept]: num 0.749 0.76 0.743 0.904 0.707 ... ## $ r_Partic[24,Intercept]: num -0.792 -0.671 -0.647 -0.726 -0.701 ... ## $ r_Partic[25,Intercept]: num -0.302 -0.384 -0.14 -0.312 -0.306 ... ## $ r_Partic[26,Intercept]: num -0.599 -1.12 -0.663 -1.047 -1.045 ... ## $ lp__ : num -726 -730 -726 -722 -725 ... ## $ z_1[1,1] : num -0.28 -1.267 -0.208 0.11 -0.968 ... ## $ z_1[1,2] : num 0.5736 -0.0571 1.0042 0.4188 0.8632 ... ## $ z_1[1,3] : num 1.22 1.44 1.86 1.81 1.69 ... ## $ z_1[1,4] : num -0.6223 0.0406 -0.6806 0.2744 0.1483 ... ## $ z_1[1,5] : num -0.647 -0.159 0.316 -0.097 -0.329 ... ## $ z_1[1,6] : num 0.974 0.267 0.476 0.52 0.667 ... ## $ z_1[1,7] : num -1.221 -1.464 -1.072 -0.697 -0.421 ... ## $ z_1[1,8] : num 0.3131 -0.3275 -0.0864 0.1046 0.3287 ... ## $ z_1[1,9] : num 0.2322 -0.8027 -0.4908 -0.6641 0.0614 ... ## $ z_1[1,10] : num -0.0858 0.4337 -0.1193 0.5929 0.1036 ... ## $ z_1[1,11] : num 0.1351 0.8334 0.2516 0.0658 0.7903 ... ## $ z_1[1,12] : num -0.154 0.945 0.266 0.223 0.231 ... ## $ z_1[1,13] : num 1.055 0.398 0.751 1.255 0.788 ... ## $ z_1[1,14] : num -0.0374 1.4099 0.4482 1.2623 1.4965 ... ## $ z_1[1,15] : num 1.091 0.99 0.916 1.323 0.84 ... ## $ z_1[1,16] : num -2.1 -3.02 -3 -2.4 -2.75 ... ## $ z_1[1,17] : num 0.579 -0.208 -0.682 0.237 0.307 ... ## $ z_1[1,18] : num 0.708 1.059 1.304 0.651 0.606 ... ## $ z_1[1,19] : num -1.777 -0.463 -0.559 -0.715 -0.384 ... ## $ z_1[1,20] : num -1.765 -0.376 -1.298 -0.494 -0.848 ... ## $ z_1[1,21] : num 0.73 -0.293 0.184 0.953 0.167 ... ## $ z_1[1,22] : num 0.4516 -0.818 -0.3438 -0.0574 -0.1101 ... ## $ z_1[1,23] : num 1.48 1.43 1.38 1.42 1.2 ... ## $ z_1[1,24] : num -1.57 -1.26 -1.21 -1.14 -1.19 ... ## $ z_1[1,25] : num -0.598 -0.723 -0.261 -0.49 -0.52 ... ## $ z_1[1,26] : num -1.19 -2.11 -1.23 -1.64 -1.77 ... ``` ] --- # Your turn! .UA-red[<b> .left-column[.Super[🏃] ] - Make a plot to summarise the posterior for sigma & the sd for participants - If you're quick! 
Play around with different options we saw </b> ] --- ## A possible solution: .pull-left[ ```r posterior_samples(Model3_CJ) %>% select( sigma, sd_Partic__Intercept ) %>% model_parameters( ) %>% plot(show_labels = TRUE, size_text = 3) ``` ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-97-1.png" width="432" /> ] --- ## Make plot for random effects We can also make plots for all mu_j's (aka: the specific residuals for each participant) .pull-left[ ```r posterior_samples(Model3_CJ) %>% select( starts_with("r_Partic") ) %>% pivot_longer( starts_with("r_Partic") ) %>% mutate( mu_j = value ) %>% ggplot( aes(x = mu_j, y = reorder(name, mu_j)) ) + stat_pointinterval( point_interval = mean_qi, .width = .89, size = 1/6 ) + scale_y_discrete("") ``` ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-99-1.png" width="432" /> ] --- ## Make plot for random effects Remember! We can simply calculate with the posterior! So, we can plot the differences between our participants by using mu_j and the Intercept estimate (6.63). 
Sum them together and take the `exp()` .pull-left[ .small[ ```r posterior_samples(Model3_CJ) %>% select( starts_with("r_Partic") ) %>% pivot_longer( starts_with("r_Partic") ) %>% mutate( * mu_j = exp(6.63 + value) ) %>% ggplot( aes(x = mu_j, y = reorder(name, mu_j)) ) + stat_pointinterval( point_interval = mean_qi, .width = .89, size = 1/6 ) + scale_y_discrete("") + scale_x_continuous("Reading time (in seconds)") ``` ] ] .pull-right[ <img src="Part1_files/figure-html/unnamed-chunk-101-1.png" width="432" /> ] --- ## Another great package `sjPlot` .pull-left[ ```r library(sjPlot) tab_model(Model3_CJ) ``` ] .pull-right[ <table style="border-collapse:collapse; border:none;"> <tr> <th style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; text-align:left; "> </th> <th colspan="2" style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; ">Dur Log</th> </tr> <tr> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; text-align:left; ">Predictors</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">Estimates</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">CI (95%)</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Intercept</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">6.63</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">6.25 – 7.01</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Text Length</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.39</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.31 – 0.48</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; 
text-align:left; ">Right Text</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.34</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.52 – -0.19</td> </tr> <tr> <td colspan="3" style="font-weight:bold; text-align:left; padding-top:.8em;">Random Effects</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">σ<sup>2</sup></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.90</td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">τ<sub>00</sub> <sub>Partic</sub></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.39</td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">ICC</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.30</td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">N <sub>Partic</sub></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">26</td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm; border-top:1px solid;">Observations</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left; border-top:1px solid;" colspan="2">499</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; 
padding-bottom:0.1cm;">Marginal R<sup>2</sup> / Conditional R<sup>2</sup></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.133 / 0.405</td> </tr> </table> ] --- ## Combine models output in a table ```r tab_model(Model2_CJ, Model3_CJ) ``` <table style="border-collapse:collapse; border:none;"> <tr> <th style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; text-align:left; "> </th> <th colspan="2" style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; ">Dur Log</th> <th colspan="2" style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; ">Dur Log</th> </tr> <tr> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; text-align:left; ">Predictors</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">Estimates</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">CI (95%)</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">Estimates</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">CI (95%)</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Intercept</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">6.34</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">6.02 – 6.66</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">6.63</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">6.25 – 7.01</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Text Length</td> <td style=" 
padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.50</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.40 – 0.59</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.39</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.31 – 0.48</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Right Text</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.34</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.52 – -0.14</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.34</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.52 – -0.19</td> </tr> <tr> <td colspan="5" style="font-weight:bold; text-align:left; padding-top:.8em;">Random Effects</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">σ<sup>2</sup></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2"> </td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.90</td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">τ<sub>00</sub></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2"> </td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.39 <sub>Partic</sub></td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; 
padding-bottom:0.1cm;">ICC</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2"> </td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.30</td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">N</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2"> </td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">26 <sub>Partic</sub></td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm; border-top:1px solid;">Observations</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left; border-top:1px solid;" colspan="2">499</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left; border-top:1px solid;" colspan="2">499</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">R<sup>2</sup> Bayes</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.186</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.133 / 0.405</td> </tr> </table> --- ## How to tweak the estimation? 
.SW-greenD[Set the number of chains; the number of iterations; the number of warm-up iterations]

```r
Model3_CJ <- brm(
  Dur_Log ~ Text_Length + Right_Text + (1|Partic),
  data = Data_CJ2,
  family = "gaussian",
  backend = "cmdstanr",
* iter = 3000,
* chains = 8,
* warmup = 1500,
  seed = 1975
)
```

Tip: it's better to run fewer chains and more iterations per chain, so you get more precise parameter estimates

---

## How to tweak the estimation?

.SW-greenD[Increase efficiency]

.small[
```r
Model3_CJ <- brm(
  Dur_Log ~ Text_Length + Right_Text + (1|Partic),
  data = Data_CJ2,
  family = "gaussian",
  backend = "cmdstanr",
  iter = 3000,
  chains = 8,
  warmup = 1500,
  cores = 4,
  seed = 1975
)
```
]

Modern computers have multiple cores `\(\rightarrow\)` let them work simultaneously

---

## Question 4

<i> Hey! It's still the same question! </i>

.UA-red[<i><b> Does the time that people spend reading a text differ between the two texts if we control for the length of the text? </b></i>]

In the previous analyses we neglected that we have multiple observations per pair of texts!

# Your turn! You have the power!

.left-column[  ]

.right-column[
.UA-red[<b>
.left-column[.Super[🏃] ]

- Do the necessary analyses
- Make a plot to summarise the posterior for sigma & the sd's
- If you're quick! Play around with the different options we saw...
</b> ] ]

---

## Solution:

```r
Model4_CJ <- brm(
  Dur_Log ~ 1 + Text_Length + Right_Text + (1|Partic) + (1|Compar),
  data = Data_CJ2,
  family = "gaussian",
  backend = "cmdstanr",
  cores = 4,
  seed = 1975
)
```

---

.footnotesize[
```r
Model4_CJ <- readRDS(here("models", "Model4_CJ.RDS"))
summary(Model4_CJ)
```

```
##  Family: gaussian
##   Links: mu = identity; sigma = identity
## Formula: Dur_Log ~ 1 + Text_Length + Right_Text + (1 | Partic) + (1 | Compar)
##    Data: Data_CJ2 (Number of observations: 499)
## Samples: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;
##          total post-warmup samples = 4000
##
## Group-Level Effects:
## ~Compar (Number of levels: 30)
##               Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## sd(Intercept)     0.14      0.07     0.01     0.28 1.00     1179     1770
##
## ~Partic (Number of levels: 26)
##               Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## sd(Intercept)     0.63      0.11     0.45     0.88 1.00      967     1194
##
## Population-Level Effects:
##             Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## Intercept       6.63      0.19     6.23     6.99 1.00     1304     2184
## Text_Length     0.39      0.04     0.31     0.48 1.00     4341     3143
## Right_Text     -0.35      0.09    -0.52    -0.18 1.00     4095     2984
##
## Family Specific Parameters:
##       Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## sigma     0.94      0.03     0.88     1.00 1.00     3609     2829
##
## Samples were drawn using sample(hmc). For each parameter, Bulk_ESS
## and Tail_ESS are effective sample size measures, and Rhat is the potential
## scale reduction factor on split chains (at convergence, Rhat = 1).
```
]

---

## But is it a good model?
.center2[
Two complementary procedures:

- posterior-predictive check
- compare models with .SW-greenD[<i>leave one out cross-validation</i>]
]

---

# Posterior-predictive check

A visual check that can be performed with `pp_check()` from `brms`

.small[
```r
pp_check(Model4_CJ)
```

<img src="Part1_files/figure-html/unnamed-chunk-109-1.png" width="360" />
]

---

## Model comparison with loo cross-validation

`\(\sim\)` AIC or BIC in Frequentist statistics

`\(\widehat{elpd}\)`: "expected log predictive density" (higher `\(\widehat{elpd}\)` implies better model fit without being sensitive to overfitting!)

.footnotesize[
```r
loo_Model2 <- loo(Model2_CJ)
loo_Model3 <- loo(Model3_CJ)
loo_Model4 <- loo(Model4_CJ)

Comparison <- loo_compare(loo_Model2, loo_Model3, loo_Model4)

print(Comparison, simplify = FALSE)
```

```
##           elpd_diff se_diff elpd_loo se_elpd_loo p_loo  se_p_loo looic
## Model4_CJ    0.0       0.0  -693.8     17.7        33.5    2.4   1387.7
## Model3_CJ   -0.4       1.5  -694.3     17.8        25.7    1.9   1388.5
## Model2_CJ  -67.0      11.2  -760.8     15.7         4.1    0.3   1521.7
##           se_looic
## Model4_CJ   35.3
## Model3_CJ   35.6
## Model2_CJ   31.3
```
]

---

## What about setting your own priors?

`brms` has some default priors programmed (uninformative priors)!

But: .UA-red[WAMBS] (when to <b>W</b>orry and how to <b>A</b>void <b>M</b>isuse of <b>B</b>ayesian <b>S</b>tatistics) (Rens van de Schoot et al. (2021))

<i> .SW-greenD[
> "Ensure the prior distributions and the model or likelihood are well understood and described in detail in the text. Prior-predictive checking can help identify any prior-data conflict"
] </i>

---

## What about setting your own priors?

Let's first find out which priors `brms` sets by default in the model we defined.
Use the `get_prior()` function:

.footnotesize[
```r
get_prior(
  Dur_Log ~ 1 + Text_Length + Right_Text + (1|Partic) + (1|Compar),
  data = Data_CJ2,
  family = "gaussian")
```

```
##                   prior     class        coef  group resp dpar nlpar bound
##                  (flat)         b
##                  (flat)         b  Right_Text
##                  (flat)         b Text_Length
##  student_t(3, 7.5, 2.5) Intercept
##    student_t(3, 0, 2.5)        sd
##    student_t(3, 0, 2.5)        sd             Compar
##    student_t(3, 0, 2.5)        sd   Intercept Compar
##    student_t(3, 0, 2.5)        sd             Partic
##    student_t(3, 0, 2.5)        sd   Intercept Partic
##    student_t(3, 0, 2.5)     sigma
##        source
##       default
##  (vectorized)
##  (vectorized)
##       default
##       default
##  (vectorized)
##  (vectorized)
##  (vectorized)
##  (vectorized)
##       default
```
]

---

## Visualise priors

Imagine you want to visualise the prior for the `Intercept` & also visualise an alternative prior: `\(N(6,2)\)`

.pull-left[ .footnotesize[
```r
library(metRology) # to use dt.scaled()

# Create a vector with possible Dur_Log values
# and call this vector x
x <- seq(1,20,by=.1)

# probability density for our own prior
# saved in the vector custom
custom <- dnorm(x,6,2)

# probability density for brms prior
# saved in the vector brms_default
brms_default <- dt.scaled(x,3,7.5,2.5)

# Plot both
# (tibble() replaces the deprecated data_frame())
tibble(x,custom,brms_default) %>%
  pivot_longer(
    c(custom,brms_default),
    names_to = "Prior") %>%
  ggplot(
    aes(x = x, y = value, lty = Prior)) +
  geom_line() +
  scale_x_continuous(
    "Dur_log",limits = c(1,20)) +
  scale_y_continuous(
    "probability density")
```
] ]

.pull-right[
<img src="Part1_files/figure-html/unnamed-chunk-113-1.png" width="432" />
]

---

## Change priors in `brms`

We use `set_prior()` to define priors and `prior = ... 
` in the `brm()` part

.footnotesize[
```r
# Create an object with the definition of the priors you want to define manually
priors_M4 <- c(
  set_prior("normal(6,2)", class = "Intercept"))

Model4b_CJ <- brm(
  Dur_Log ~ 1 + Text_Length + Right_Text + (1|Partic) + (1|Compar),
  data = Data_CJ2,
  family = "gaussian",
  backend = "cmdstanr",
* prior = priors_M4,
  cores = 4,
  seed = 1975
)
```
]

If we want to add other manually defined priors as well, we can do something like:

.footnotesize[
```r
priors_Manually <- c(
  set_prior("normal(6,2)", class = "Intercept"),
  set_prior("normal(0,3)", class = "b", coef = "Right_Text"),
  ...
)
```
]

---

## Let's think about the effects we expect

Bürkner:

.SW-greenD[ <i>
> The default prior for population-level effects (including monotonic and category specific effects) is an improper flat prior over the reals.
</i>

https://rdrr.io/cran/brms/man/set_prior.html ]

If you know that the sd of `Dur_Log` equals 1.22, what could be a good prior (not too informative) for the effect of:

- `Text_Length`
- `Right_Text`

---

## Some possibilities

Option 1: `\(N(0,2)\)` or Option 2: `\(N(0,4)\)`

.pull-left[ .footnotesize[
```r
x <- seq(-8,8,by=.1)

# probability densities for both
option1 <- dnorm(x,0,2)
option2 <- dnorm(x,0,4)

# Plot both
# (tibble() replaces the deprecated data_frame())
tibble(x, option1, option2) %>%
  pivot_longer(
    c(option1, option2),
    names_to = "Prior") %>%
  ggplot(
    aes(x = x, y = value, lty = Prior)) +
  geom_line() +
  scale_x_continuous(
    "Dur_log",limits = c(-8,8)) +
  scale_y_continuous(
    "probability density")
```
] ]

.pull-right[
<img src="Part1_files/figure-html/unnamed-chunk-117-1.png" width="432" />
]

---

## Your turn!

.UA-red[<b>
.left-column[.Super[🏃] ]

- Re-estimate Model 4 with a custom prior for both regression effects
- Use `tab_model()` to summarize both models: this model and the model with the `brms` default priors
- Big changes?
</b> ]

Remember:

---

## Possible solution

```r
# Set the priors
priors_M4b <- c(
  set_prior("normal(0,2)", class = "b", coef = "Right_Text"),
  set_prior("normal(0,2)", class = "b", coef = "Text_Length")
)

# Estimate the model
Model4b_CJ <- brm(
  Dur_Log ~ 1 + Text_Length + Right_Text + (1|Partic) + (1|Compar),
  data = Data_CJ2,
  family = "gaussian",
  prior = priors_M4b,
  backend = "cmdstanr",
  cores = 4,
  seed = 1975
)

# Summarize both models side by side
tab_model(Model4_CJ, Model4b_CJ)
```

---

## Possible solution

<table style="border-collapse:collapse; border:none;"> <tr> <th style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; text-align:left; "> </th> <th colspan="2" style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; ">Dur Log</th> <th colspan="2" style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; ">Dur Log</th> </tr> <tr> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; text-align:left; ">Predictors</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">Estimates</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">CI (95%)</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">Estimates</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">CI (95%)</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Intercept</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">6.63</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">6.23 – 6.99</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">6.64</td> <td style=" padding:0.2cm; text-align:left; 
vertical-align:top; text-align:center; ">6.25 – 7.01</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Text Length</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.39</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.31 – 0.48</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.39</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.30 – 0.48</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Right Text</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.35</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.52 – -0.18</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.35</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.51 – -0.18</td> </tr> <tr> <td colspan="5" style="font-weight:bold; text-align:left; padding-top:.8em;">Random Effects</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">σ<sup>2</sup></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.88</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.88</td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">τ<sub>00</sub></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.02 <sub>Compar</sub></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; 
padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.02 <sub>Compar</sub></td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;"></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.39 <sub>Partic</sub></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.39 <sub>Partic</sub></td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">ICC</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.32</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.32</td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">N</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">26 <sub>Partic</sub></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">26 <sub>Partic</sub></td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;"></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">30 <sub>Compar</sub></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">30 <sub>Compar</sub></td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; 
padding-top:0.1cm; padding-bottom:0.1cm; border-top:1px solid;">Observations</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left; border-top:1px solid;" colspan="2">499</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left; border-top:1px solid;" colspan="2">499</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">Marginal R<sup>2</sup> / Conditional R<sup>2</sup></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.133 / 0.418</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="2">0.132 / 0.417</td> </tr> </table>

---

## Where to get help?

Now that you're ready to take your first steps in .SW-greenD[Bayesian Mixed Effects Models], there is a high probability that this will happen sometime:

.pull-left[
<img src="computer-says-no.jpeg" width="99%" height="99%" />
]

.pull-right[
This is a great source to learn more about `Stan`, `brms` & `Hamiltonian MCMC`:

https://discourse.mc-stan.org/t/divergent-transitions-a-primer/17099
]

---

## To wrap-up: why `brms`?

- .UA-red[`brms`] is more versatile than `lme4`; it can also estimate
  - multivariate models
  - finite mixture models
  - generalized mixed effects models
  - splines
  - ...
- .UA-red[`brms`] as stepping-stone to learn `Stan`

---
class: inverse-blue, center, middle

## Some references & appendices

.footnote[[<i> get me back to the topic slide</i>](#topicslide)]

---

## Some books

<img src="cover_Lambert.jpg" width="90%" height="90%" />

---

## Some books

<img src="cover_rethinking2.jpg" width="90%" height="90%" />

---

## Some free online books

- Bayes Rules!: https://www.bayesrulesbook.com/

- Last Friday I learned about this book: https://vasishth.github.io/bayescogsci/book/

---

## Rens van de Schoot

In <i>Nature Reviews</i>

<img src="Rens_NatureReviews.jpg" width="90%" height="90%" />

---

## THE Podcast

.center2[
If you like running - like I do - this could be a great companion on your run!

https://www.learnbayesstats.com/
]

---

## Site on creating the graphs

.center2[
There are many blogs and websites that you can consult if you want to find out more about making graphs. <br> One that I often fall back on is: <br> http://mjskay.github.io/tidybayes/
]

---

## Exemplary paper

with an associated 'Walkthrough in R': https://rpubs.com/jensroes/765467

<img src="Paper_Jens.jpg" width="90%" height="90%" />

---
class: inverse, center, bottom
background-image: url(marko-pekic-IpLa37Uj2Dw-unsplash.jpg)
background-size: contain

# Questions?

Do not hesitate to contact me!

sven.demaeyer@uantwerpen.be

---
class: center, middle
name: tweet_dplyr

<blockquote class="twitter-tweet"><p lang="en" dir="ltr"><a href="https://twitter.com/hashtag/dplyr?src=hash&ref_src=twsrc%5Etfw">#dplyr</a> in 6 tweets. Using the starwars dataset.<br><br>1. 
select() columns<a href="https://twitter.com/hashtag/tidyverse?src=hash&ref_src=twsrc%5Etfw">#tidyverse</a> <a href="https://twitter.com/hashtag/RStats?src=hash&ref_src=twsrc%5Etfw">#RStats</a> <a href="https://t.co/juGZbGeT9t">pic.twitter.com/juGZbGeT9t</a></p>— Hari (@illustratedbyte) <a href="https://twitter.com/illustratedbyte/status/1432416532391514113?ref_src=twsrc%5Etfw">August 30, 2021</a></blockquote> <script async src="https://platform.twitter.com/widgets.js" charset="utf-8"></script> [back to Prerequisites](#Prerequisites)
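
---

## Appendix: where do σ², τ00 and the ICC in `tab_model()` come from?

The `tab_model()` tables report variances, while `summary()` reports standard deviations. The sketch below is a rough, hand-made check rather than the way `sjPlot` computes things internally: it squares the posterior means copied from `summary(Model4_CJ)` and combines them into an intraclass correlation. The object names (`sd_compar`, `sd_partic`, `sigma`, `icc`) are made up for this illustration, and the results should only land close to the table values because the inputs are rounded summaries.

```r
# Posterior means of the standard deviations, copied from summary(Model4_CJ)
sd_compar <- 0.14  # sd(Intercept) for ~Compar
sd_partic <- 0.63  # sd(Intercept) for ~Partic
sigma     <- 0.94  # residual standard deviation

# Variances are squared standard deviations
var_compar <- sd_compar^2
var_partic <- sd_partic^2
var_resid  <- sigma^2

# Share of the total variance located at the grouping levels
icc <- (var_compar + var_partic) / (var_compar + var_partic + var_resid)

# Print everything rounded to two decimals for comparison with the table
round(c(tau00_Compar = var_compar,
        tau00_Partic = var_partic,
        sigma2       = var_resid,
        ICC          = icc), 2)
```

A more faithful version would do the same arithmetic on every posterior draw and then summarise, which also gives an uncertainty interval for the ICC.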